Discover the basic functioning of a website and the technologies used, and how to apply them to SEO

To carry out technical SEO on a website, you need to understand at least the basics of how it works. These fundamentals are often lacking within the industry.

In this post, I provide a brief summary of how the web has evolved and the technologies used to build it. Although this post may seem less directly focused on SEO, it gives us a broader picture of how the websites we work with every day actually function.

We’ll start from the beginning. The easiest way to really understand how a website works is to know its history: how it started and how it has evolved over time. In later posts, I’ll build on this foundational knowledge and focus on topics more directly related to SEO.

Web 1.0 sites were simple, static pages, with no dynamism or interaction with the user. They were like a storefront. They were simple fragments of code that made up the structure of a website in order to display text. We can say that in this type of website, communication flowed in only one direction (from the website to the user) and the user could not provide any kind of response to the information being sent from the site.

This means that websites functioned like flyers. There was no possibility of communication with the website administrators beyond displaying an email address or a phone/fax number and a physical address.

So, websites were focused on transmitting information in a one-way manner. Because of their static nature, these websites only rarely used variables (a variable simply assigns a name to a fragment of code or text). In fact, these types of pages were barely ever updated.

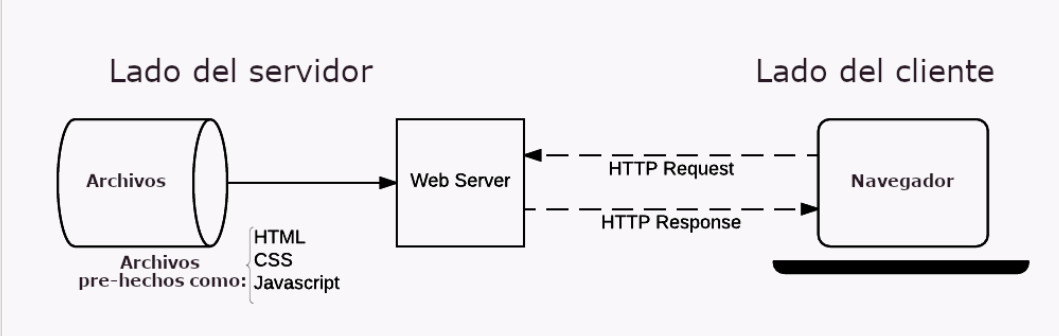

In short, we can say that a Web 1.0 site works as follows: all the code of the website is hosted on a server (which is simply a computer) with the necessary programs to process the site.

When a user reaches the domain (associated with an IP address through DNS) and a specific URL, the user, from their computer, makes a request to the server where the website is hosted, and the server sends back the necessary information so it can be displayed.

Due to how immature programming was at the time, the use of macros and iframes was common in order to reuse common elements across pages, such as the navigation menu.

Web 2.0 refers to websites where users began to interact with the site, and websites stopped being just a simple storefront on the internet.

This advancement allowed users to interact with the information displayed on the website, turning it into a real form of communication rather than mere passive observation.

To understand how this major advancement was achieved, it’s necessary to understand what a client-side language is and how users were able to communicate with the website.

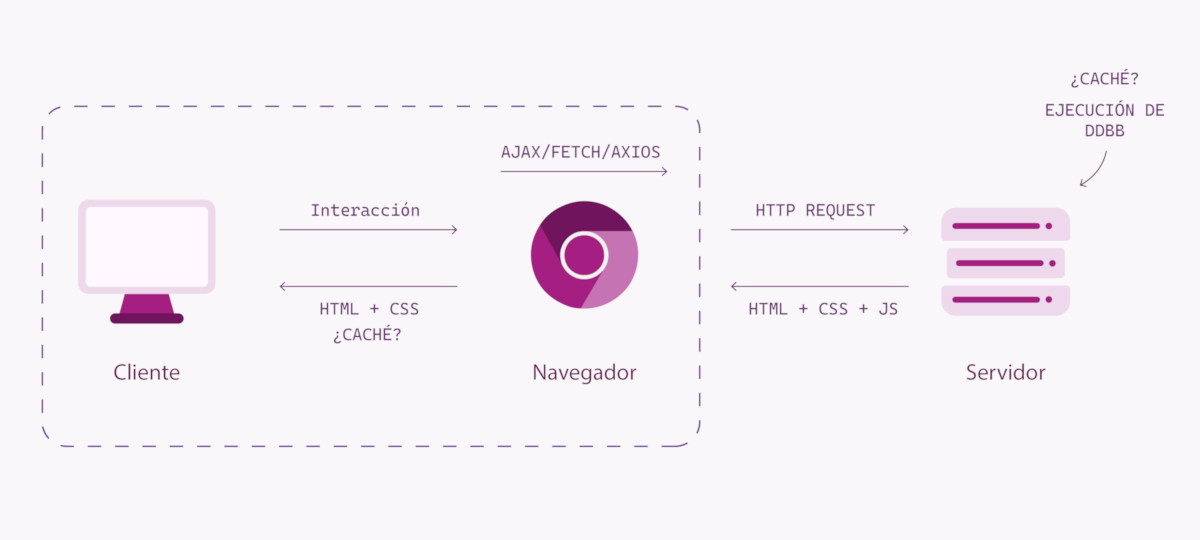

This diagram, although difficult to read, is very important not only for SEO but for any assessment or diagnosis carried out by a professional working in a web environment.

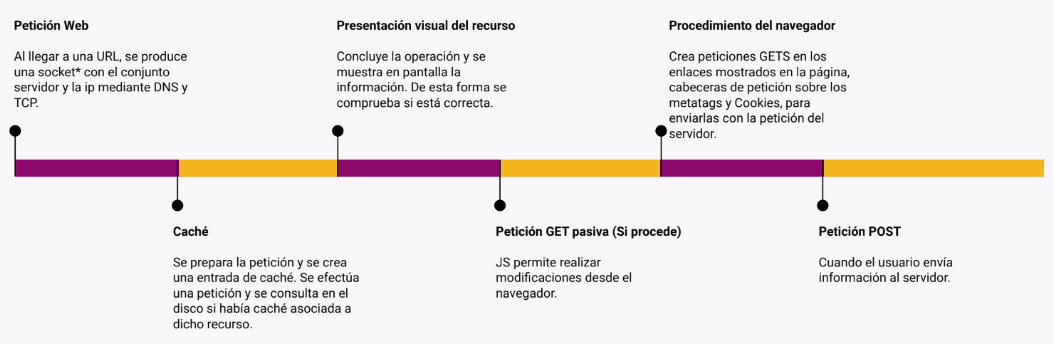

When we are on a device with a browser, we can be considered a “client”. When we enter a URL, with its domain, and submit it to the browser, the browser tries to retrieve information related to it. To do so, it has to go on quite a journey. This is what we call a request, because we are requesting information about the address we entered.
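To make the idea of a request more concrete, here is a minimal sketch that splits a URL into the parts the browser and the server care about. It uses Python's standard `urllib.parse` module; the function name `describe_url` and the example URL are my own assumptions, used only for illustration.

```python
from urllib.parse import urlsplit, parse_qs


def describe_url(url: str) -> dict:
    """Split a URL into the pieces involved in a request (illustrative helper)."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,          # protocol, e.g. https
        "domain": parts.hostname,        # resolved to an IP address through DNS
        "path": parts.path or "/",       # which resource the server should return
        "query": parse_qs(parts.query),  # optional parameters, e.g. filters
    }


# Hypothetical URL, not taken from any real site
info = describe_url("https://www.example.com/shop/shoes?color=red&page=2")
print(info)
```

The domain is the part that DNS resolves, while the path and query are what the server uses to decide what to return.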

First, the browser needs to know where to look or what to do with the domain to which we are making the request, so the DNS comes into play. Basically, DNS retrieves the information about which IP address the domain points to.

DNS responses are usually cached by ISPs (Internet service providers such as Movistar, Vodafone, or Orange). Because the records are cached to improve internet efficiency and speed, a DNS change is not visible everywhere at once: we must wait for the cached information to expire and refresh before the change is reflected across the internet. How long this takes depends on the time to live (TTL) set on each record; a window of 24 to 72 hours is commonly quoted, and it must be carefully considered during migrations.
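The effect of DNS caching during a migration can be sketched with a toy resolver. This is not how any real ISP resolver is implemented; the class `CachingResolver`, the one-hour lifetime, and the IP addresses (taken from the range reserved for documentation) are assumptions chosen to show why a change is not seen immediately.

```python
import time
from typing import Callable, Dict, Tuple


class CachingResolver:
    """Toy resolver that remembers answers for a fixed number of seconds."""

    def __init__(self, lookup: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._lookup = lookup      # asks the authoritative source for the IP
        self._ttl = ttl_seconds    # how long a cached answer stays valid
        self._clock = clock        # injectable so the example can fake time
        self._cache: Dict[str, Tuple[str, float]] = {}

    def resolve(self, domain: str) -> str:
        now = self._clock()
        cached = self._cache.get(domain)
        if cached is not None and now < cached[1]:
            return cached[0]       # still fresh: answer from the cache
        ip = self._lookup(domain)  # expired or unknown: ask again
        self._cache[domain] = (ip, now + self._ttl)
        return ip


# Hypothetical records and a fake clock (in seconds)
records = {"example.com": "192.0.2.10"}
now = [0.0]
resolver = CachingResolver(lambda d: records[d], ttl_seconds=3600,
                           clock=lambda: now[0])

before = resolver.resolve("example.com")  # first lookup, gets cached
records["example.com"] = "192.0.2.20"     # the migration changes the record
stale = resolver.resolve("example.com")   # cache still returns the old IP
now[0] = 3601                             # the cached entry has expired
fresh = resolver.resolve("example.com")   # the new IP is finally visible
print(before, stale, fresh)
```

In practice, lowering the lifetime of the record some time before a migration is a common way to shorten this window.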

Once we reach the hosting server of the website, the domain and the specified path determine which resource it directs us to, and it returns a response code. A 200 code means the request succeeded and the server will return the requested content.
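Response codes are grouped into families by their first digit, which is handy when reading crawl reports or server logs. The following sketch uses Python's standard `http.HTTPStatus` enum; the helper name `describe_status` is an assumption for illustration.

```python
from http import HTTPStatus

# Families of response codes, keyed by the first digit
FAMILIES = {
    1: "informational",
    2: "success",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def describe_status(code: int) -> str:
    """Return the code, its standard phrase, and its family (illustrative helper)."""
    status = HTTPStatus(code)  # raises ValueError for codes the enum does not know
    family = FAMILIES[code // 100]
    return f"{code} {status.phrase} ({family})"


for code in (200, 301, 404, 503):
    print(describe_status(code))
```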

The server will then process the information and execute it using the corresponding programming language and rules defined on the server, unless server-side caching is configured, in which case the server skips the “thinking” step and delivers the information directly.

The server then sends the “processed” information to the browser, that is, the multimedia content along with the website structure in HTML, CSS, and JavaScript (the only programming language that browsers process and render by default).

If the browser already has these files stored in the user cache, it won’t need to download them again, making the website faster.

It’s important to keep in mind that Google does not render websites the same way as a regular user, so as SEOs, we must consider how Google renders our pages when Googlebot accesses them.

Finally, once the URL is loaded, the user may make additional requests, such as filtering products from the database. In that case the client sends a new request to the server, which in turn queries the database (in the example, the request would be made using Ajax, Fetch, or Axios).
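On the server side, a filter request like this could be handled roughly as follows. This is a minimal sketch under my own assumptions: the product list stands in for a real database, and the function name `handle_filter_request` and the `color` parameter are hypothetical.

```python
import json
from urllib.parse import parse_qs

# Stand-in for a database table (hypothetical data)
PRODUCTS = [
    {"name": "Running shoe", "color": "red", "price": 80},
    {"name": "Trail shoe", "color": "blue", "price": 95},
    {"name": "Sandal", "color": "red", "price": 30},
]


def handle_filter_request(query_string: str) -> str:
    """Read the filter sent by the client and answer with JSON."""
    params = parse_qs(query_string)
    color = params.get("color", [None])[0]  # None means "no filter"
    matches = [p for p in PRODUCTS if color is None or p["color"] == color]
    return json.dumps({"count": len(matches), "products": matches})


# What the client would receive after requesting ?color=red
response_body = handle_filter_request("color=red")
data = json.loads(response_body)
print(data["count"], [p["name"] for p in data["products"]])
```

The browser receives only the JSON and updates the product list on the page without reloading it.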

When a website is running, the process that takes place, explained in a very basic way, is as follows:

All programming languages that run on the server side are known as “server-side languages.” The most well-known is PHP.

There are other languages known as “client-side languages,” such as JavaScript. These languages, instead of running on the server that sends the information, run on the user’s device. This shifts the processing load from the server to the user’s computer, reducing server load but increasing client-side processing.

Technologies such as AJAX began to be used, allowing the client to send information to the server, and through it to the website’s database, without reloading the page. As mentioned earlier, this is what enables users to send responses to the information displayed on the page.

The original browser API behind AJAX, XMLHttpRequest, has some limitations, and modern implementations mostly rely on the Fetch API or on libraries such as Axios. But that’s another story, so we’ll focus on AJAX as the foundation of how this process originally worked.

These technological advances are what made user interaction on the web possible, defining Web 2.0.

Now that we understand how a website works, let’s look at the types of technologies that can be used.

Another important part of the web, one that affects us from an SEO perspective, is the technologies used to develop websites.

There is a wide range of different technologies available for building a website. Each has its own advantages and disadvantages, and depending on the project, some technologies may be more suitable than others.

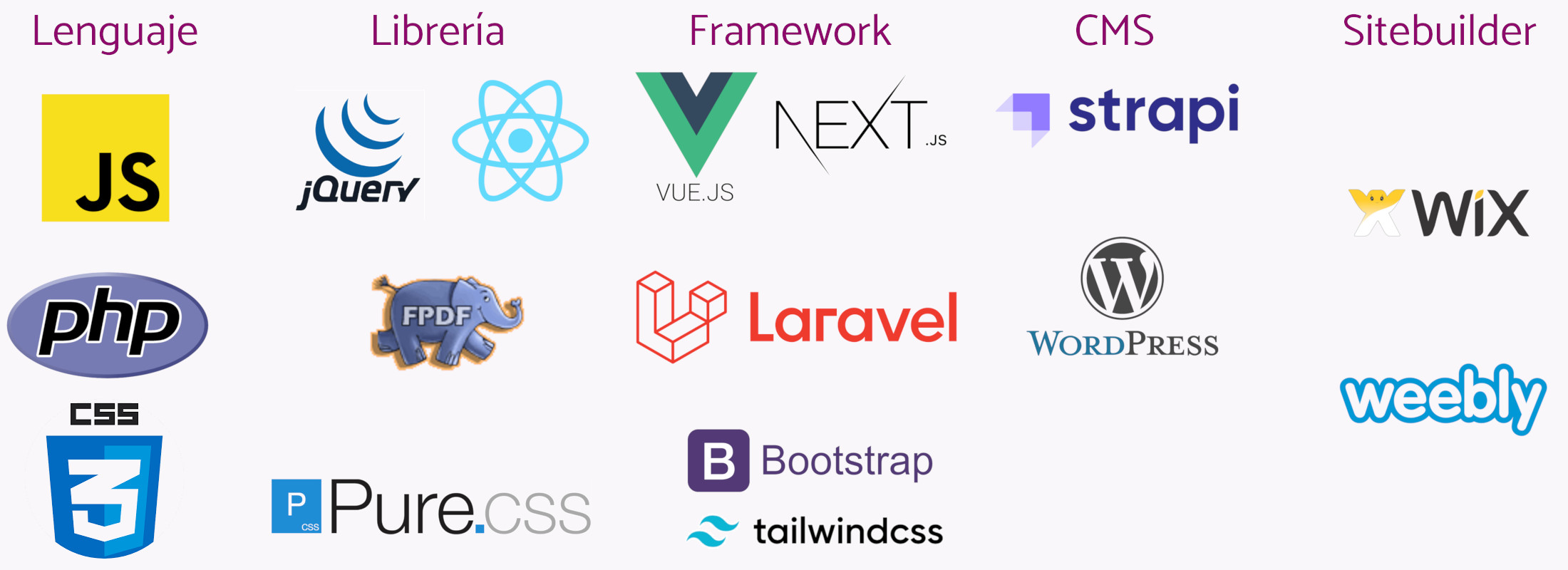

Technologies based on programming languages can be divided into Libraries, Frameworks, CMSs, and Site Builders. The line between these types of technologies is very thin, so let’s take it step by step.

Knowing which technology a website is built with allows us to quickly identify its strengths and weaknesses in terms of SEO. This helps us maximize its potential at any given time.

A library is a set of functions that you can call from any project. This set of functions can be adapted to an existing ecosystem. For example, jQuery is one of the most well-known (and most disliked) libraries that can help you create a slider without having to write all the code from scratch. There are also PHP libraries that make it easier to generate PDFs, and they can be included and used in any project where code can be modified.

jQuery holds a special place for me, as it kept programmers from going crazy back when each browser interpreted JavaScript differently (before standardization). It made it much easier to write code compatible with all browsers at the time, and it simplified the use of AJAX.

A Framework, on the other hand, provides an entire web ecosystem and structure. It often comes with certain libraries included by default, making the task of building a website easier, faster, and more organized.

Then there are CMSs, like WordPress, which many of you are already familiar with. However, there are many types of CMSs and different ways to use them. Subdivisions exist, such as CMF or Headless CMS, though making these distinctions can be difficult, as it heavily depends on how the technology is used.

We can find CMSs like Strapi, which is based on Node.js and provides only the back-end, while other types of CMSs function almost like Sitebuilders.

WordPress itself is a good example. When you edit its theme directly, it can be used as a CMS with just the back-end, almost like a framework. It can also be used as a Sitebuilder, with tools such as Blocksy or Elementor, building the website in blocks without needing to code.

Finally, there are the aforementioned Sitebuilders, like Weebly or Wix. Generally, the websites you create with them don’t even truly belong to you. They are hardly scalable and offer very limited customization.

To analyze what a website is built with, we can use tools like BuiltWith or Wappalyzer. Each of these tools, in its own way, uses certain methods to detect how a site was constructed.
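I don't know exactly how these tools work internally, but one signal that is easy to check by hand is the `generator` meta tag that some CMSs, WordPress among them, add to the HTML. The sketch below reads it with Python's standard `html.parser`; the class and function names and the sample HTML are assumptions for illustration, and real detection tools certainly combine many more signals.

```python
from html.parser import HTMLParser
from typing import Optional


class GeneratorFinder(HTMLParser):
    """Remember the content of the first <meta name="generator"> tag found."""

    def __init__(self) -> None:
        super().__init__()
        self.generator: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.generator is not None:
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "generator":
            self.generator = attributes.get("content")


def detect_generator(html: str) -> Optional[str]:
    """Return the declared generator, or None when the page does not state one."""
    finder = GeneratorFinder()
    finder.feed(html)
    return finder.generator


# Hypothetical page source
sample = (
    '<html><head><meta name="generator" content="WordPress 6.4">'
    "</head><body></body></html>"
)
print(detect_generator(sample))
```

Keep in mind that this tag is often removed or hidden, so a missing value tells us nothing about the technology behind the site.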

Knowing how SEO works without relying on plugins gives us greater flexibility across these technologies, something that all major companies actively value.

Some of these technologies add an extra layer to how websites function today. But with this basic knowledge, we can get a pretty good idea of how a website was built.

This will be important for understanding which aspects of these technologies we need to consider as they impact SEO.

I currently offer advanced SEO training in Spanish. Would you like me to create an English version? Let me know!
