Learn how to do SEO for 18+ or paid websites

SEO work sometimes brings significant challenges. One of them is handling projects whose content, for legal reasons or company policy, is not accessible to every user: for example, adult content or premium content that is only available through payment.

In these cases, if we want to rank these pages, we need to ensure that users who should not see the content are blocked from accessing it, while still allowing Google to see our content so it can crawl and index it.

To prevent Google from showing a cached copy of our exclusive content in search results, it is recommended to add the noarchive value to the robots meta tag:

```html
<meta name="robots" content="index, follow, noarchive">
```

It is also advisable to add the data-nosnippet attribute to any element whose text you do not want shown as a snippet in the SERPs (search engine results pages).

Adult content can take many forms, including pages with sexual content, violence, alcohol, etc. The most common way to limit access to these pages is a pop-up or form that asks users to verify their age.

If Googlebot is served this same gate, it is unlikely to crawl, render, and index the site correctly. Ideally, you should let Google access the content without passing the age verification.

> For content behind a mandatory age gate, we recommend allowing Googlebot to crawl your content without triggering the age gate. You can do this by verifying Googlebot requests and serving the content without age gate.
>
> (Google Developers: SafeSearch)

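To implement this, you can create an exception for the Googlebot user agent. A safer option is to restrict that exception to requests coming from Google's IP addresses, since anyone can fake the user-agent string. Either way, Googlebot can browse the site without restrictions while other users remain blocked.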
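The following is a minimal sketch of the verification step, assuming a Python backend. It follows Google's documented reverse-then-forward DNS check. First, resolve the IP to a hostname and confirm that the hostname ends in googlebot.com or google.com. Then resolve that hostname back and confirm it returns the same IP. The function names and the age-gate helper are hypothetical. The DNS resolvers are injectable so the logic can be tested without network access, and `gethostbyname_ex` only covers IPv4.

```python
import socket
from typing import Callable

# Reverse DNS domains Google documents for Googlebot.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(
    ip: str,
    reverse_lookup: Callable = socket.gethostbyaddr,
    forward_lookup: Callable = socket.gethostbyname_ex,
) -> bool:
    """Reverse DNS, domain check, then forward DNS back to the same IP."""
    try:
        hostname, _aliases, _addrs = reverse_lookup(ip)
    except OSError:
        return False  # no PTR record: cannot be verified as Googlebot
    if not hostname.endswith(GOOGLE_CRAWLER_DOMAINS):
        return False
    try:
        _name, _aliases, forward_ips = forward_lookup(hostname)
    except OSError:
        return False
    # The hostname must resolve back to the original IP, or the PTR could be forged.
    return ip in forward_ips


def needs_age_gate(user_agent: str, ip: str, age_verified: bool, **lookups) -> bool:
    """Hypothetical helper: skip the gate for verified adults and verified Googlebot."""
    if age_verified:
        return False
    # The user-agent string is only a cheap first filter; anyone can fake it.
    if "Googlebot" in user_agent and is_verified_googlebot(ip, **lookups):
        return False
    return True
```

In production, you would cache the verification result per IP, because running two DNS lookups on every request is slow.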

In some cases, users are required to register to view website content. In that case, you should use structured data for paywalled content, or apply the same approach as with the age verification pop-up.

It is important to leave these pages accessible to Google if you intend for them to be crawled and indexed. If Googlebot also has to register to access a page, all it will find is the registration wall, not the content.

Some pages go beyond standard adult content (alcohol, gambling, or other substances) and include even more sensitive material, such as pornography, violence, or gore.

In these cases, Google applies the SafeSearch filter, since certain users prefer not to encounter these types of results in their searches.

Pages with any type of nudity (including realistic dolls), escort services, or violence, and even pages that link to such content, can be filtered for these reasons. This is one of the cases where link obfuscation can make sense.

For highly explicit websites, it is sensible to implement several practices. Let’s go through them in detail.

The rating meta tag helps Google understand which pages contain explicit content, so it can take this into account.

This meta tag makes sense for explicit content and should only be used on pages containing such content.

```html
<meta name="rating" content="adult">
```

Or another, more complex and equally valid option:

```html
<meta name="rating" content="RTA-5042-1996-1400-1577-RTA">
```
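The following is a minimal sketch, assuming pages are rendered from Python. It emits the tag only when a page is flagged as explicit, so the label never leaks onto non-explicit pages. The `is_explicit` flag and the function name are hypothetical; the two tag values are the ones shown above.

```python
from html import escape

# The two values shown above; either one marks the page as explicit.
RATING_SIMPLE = "adult"
RATING_RTA = "RTA-5042-1996-1400-1577-RTA"


def rating_meta_tag(is_explicit: bool, use_rta_label: bool = False) -> str:
    """Return the rating meta tag for explicit pages, or an empty string otherwise."""
    if not is_explicit:
        # The tag should only appear on pages that actually contain explicit content.
        return ""
    value = RATING_RTA if use_rta_label else RATING_SIMPLE
    return f'<meta name="rating" content="{escape(value)}">'
```

The returned string is meant to be placed in the page's `<head>`.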

Update 19/01/2023: It will be necessary to monitor the upcoming law on adult content, which aims to require users to verify age with an ID. In my opinion, if this happens, it should be treated similarly to paywalled content.

Grouping explicit content makes sense when a site has a significant amount of explicit content alongside non-explicit content.

In that case, it is recommended to place the explicit content in a specific directory or subdomain. This makes it much easier for the search engine to separate the two.

If there are only a few explicit pages relative to the rest of the site, grouping is not necessary, and the rating meta tag will suffice.
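Once explicit content is grouped, deciding whether a page is explicit becomes a simple URL check. The following is a minimal sketch, assuming a hypothetical `/explicit/` directory or `explicit.` subdomain; substitute whatever path or host your site actually uses.

```python
from urllib.parse import urlsplit

# Hypothetical locations for the grouped explicit content.
EXPLICIT_DIRECTORY = "/explicit/"
EXPLICIT_SUBDOMAIN = "explicit."


def is_in_explicit_section(url: str) -> bool:
    """True if the URL lives in the dedicated explicit directory or subdomain."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # urlsplit already lowercases the hostname
    # Normalize to a trailing slash so "/explicit" matches but "/explicitly-safe/" does not.
    path = parts.path if parts.path.endswith("/") else parts.path + "/"
    return host.startswith(EXPLICIT_SUBDOMAIN) or path.startswith(EXPLICIT_DIRECTORY)
```

A check like this can drive both the rating meta tag and any section-wide rules, keeping the explicit label confined to the grouped section.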

For videos, Google must be able to access the video URL. It is also recommended to use video structured data or a video sitemap to help it discover them.

Google will not show the video URL directly in search results, but rather the page where the video is displayed. The official documentation covers the technical details extensively.
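The following is a minimal sketch of a single video sitemap entry, generated with Python's standard library. It uses only the core tags of Google's video sitemap format (`thumbnail_loc`, `title`, `description`, `content_loc`). All URLs and text are placeholders, and the official documentation should be checked for the optional tags.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
VIDEO_NS = "http://www.google.com/schemas/sitemap-video/1.1"
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("video", VIDEO_NS)


def video_sitemap(page_url: str, video_url: str, thumbnail_url: str,
                  title: str, description: str) -> str:
    """Build a sitemap with one <url> entry describing one video."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
    # <loc> is the page that displays the video: that page is what appears in results.
    ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
    video = ET.SubElement(url, f"{{{VIDEO_NS}}}video")
    ET.SubElement(video, f"{{{VIDEO_NS}}}thumbnail_loc").text = thumbnail_url
    ET.SubElement(video, f"{{{VIDEO_NS}}}title").text = title
    ET.SubElement(video, f"{{{VIDEO_NS}}}description").text = description
    # The video file itself must be fetchable by Googlebot.
    ET.SubElement(video, f"{{{VIDEO_NS}}}content_loc").text = video_url
    return ET.tostring(urlset, encoding="unicode")
```

The returned string has no XML declaration. Prepend `<?xml version="1.0" encoding="UTF-8"?>` when writing it to a file.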

For various reasons, some websites block users from specific countries. Location-based blocking is handled in a similar way. Countries, including the United States, can be blocked for legal reasons. However, Googlebot should never be blocked in the United States, because it crawls primarily from US IP addresses.

As each blocking system is unique and often relies on additional libraries, the best practice is to allow Googlebot, or Google’s IP range (via GeoIP, for example), to access the content without restrictions, enabling it to crawl the page and making it indexable.
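The following is a minimal sketch of that rule. It assumes the country code already comes from your GeoIP lookup. It also assumes Google's published Googlebot IP ranges (which Google publishes as a JSON file) have been loaded as CIDR networks. The range below is a documentation placeholder (TEST-NET-1), not a real Google range. The blocked-country set is hypothetical, and returning HTTP 451 for blocked visitors is one possible choice.

```python
import ipaddress

# Placeholder: replace with the CIDR ranges Google publishes for Googlebot.
GOOGLEBOT_RANGES = [ipaddress.ip_network("192.0.2.0/24")]

# Hypothetical countries blocked for legal reasons (ISO 3166-1 alpha-2 codes).
BLOCKED_COUNTRIES = {"US", "DE"}


def is_google_ip(ip: str) -> bool:
    """True if the address falls inside one of the loaded Googlebot ranges."""
    addr = ipaddress.ip_address(ip)
    # Mixed IPv4/IPv6 comparisons simply return False.
    return any(addr in network for network in GOOGLEBOT_RANGES)


def geo_block_status(ip: str, country_code: str) -> int:
    """HTTP status to return: 200 serves the page, 451 blocks it."""
    if is_google_ip(ip):
        return 200  # whitelist Googlebot even when its country is blocked
    if country_code.upper() in BLOCKED_COUNTRIES:
        return 451  # Unavailable For Legal Reasons
    return 200
```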

John Mueller has advised against blocking Googlebot even when its country is blocked. In other words, whitelist Googlebot so it can crawl and interpret the content.

This makes sense. In any case, we can check if Google is accessing the pages through Search Console and verify its effectiveness by analyzing logs.
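The following is a minimal sketch of the log check, assuming an access log in the common Combined Log Format. It counts requests whose user agent claims to be Googlebot, grouped by path and status code. A Googlebot request that gets the age-gate page, a 403, or a 451 is a sign that the exception is not working. The user-agent string can be faked, so the IPs found this way should still be confirmed with Google's reverse DNS verification before drawing conclusions.

```python
import re
from collections import Counter
from typing import Iterable

# Combined Log Format: ip ident user [time] "METHOD path PROTOCOL" status size "referer" "agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines: Iterable[str]) -> Counter:
    """Count (path, status) pairs for requests whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match["agent"]:
            hits[(match["path"], int(match["status"]))] += 1
    return hits
```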

If there is no intention to block content by country, you should never redirect users based on their IP to select a language. This is bad practice because it prevents search engines from crawling all language versions correctly. Instead, you should implement proper hreflang configuration.
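The following is a minimal sketch of the hreflang annotations that replace IP-based language redirects. It assumes each language version has its own URL; the URLs and language codes are placeholders. Every version should carry the same full set of tags, including one pointing to itself. An x-default tag covers users whose language matches none of the listed versions.

```python
from html import escape


def hreflang_links(versions: dict[str, str], x_default: str) -> str:
    """Return <link rel="alternate"> tags for every language version of a page."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}">'
        for lang, url in sorted(versions.items())
    ]
    # x-default tells Google which URL to use when no listed language matches.
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">')
    return "\n".join(tags)
```

Because no redirect happens, Googlebot can crawl every language version from any IP.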

Subscription or premium content is content that the user can only fully access if they subscribe to the site.

Since Googlebot sees content that non-subscribed users cannot fully view, Google may interpret this as cloaking. To prevent this, paywalled content structured data can be used to tell Google which parts are restricted, so it can still crawl and index the content.

The best way to implement this is to wrap all content that should only be visible to subscribed users inside a container (like a div or section) with a specific CSS class of your choice.

Here is an example in which one element should only be visible to subscribed users:

```html
<body>
  <section id="content">
    <div> Lorem ipsum....</div>
    <div class="premium">This content should only be visible to subscribers.</div>
    <div class="example">This content is for another example.</div>
  </section>
</body>
```

We can imagine that we don’t want the content inside the element with the class premium to be visible. Typically, this is hidden using CSS or JavaScript. However, it could also be done server-side, as long as Googlebot can see it without needing a subscription. (In my Cloaking article and in my Technical SEO master's program, I teach how to implement this practice).
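The following is a minimal server-side sketch, assuming a Python backend. The `premium` div is included in the HTML only for subscribers and for requests already verified as Googlebot; otherwise it is left out. How the two flags are computed (session lookup, Googlebot verification) is not shown, and the function name is hypothetical. The markup and class names match the example above.

```python
from html import escape


def render_content(intro: str, premium: str, other: str,
                   is_subscriber: bool, is_verified_googlebot: bool) -> str:
    """Render the #content section, keeping .premium only for allowed visitors."""
    parts = ['<section id="content">', f"<div>{escape(intro)}</div>"]
    if is_subscriber or is_verified_googlebot:
        # Googlebot receives the same premium markup a subscriber sees.
        parts.append(f'<div class="premium">{escape(premium)}</div>')
    parts.append(f'<div class="example">{escape(other)}</div>')
    parts.append("</section>")
    return "\n".join(parts)
```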

On its own, this could be considered a bad practice. To make it acceptable, we need to involve Google and tell it, through structured data, what type of content we are providing.

This structured data snippet can be added to any of these popular structured data types:

There are several options (these are my recommendations), and for clarity, I’ll choose one type of structured data and apply it in an example.

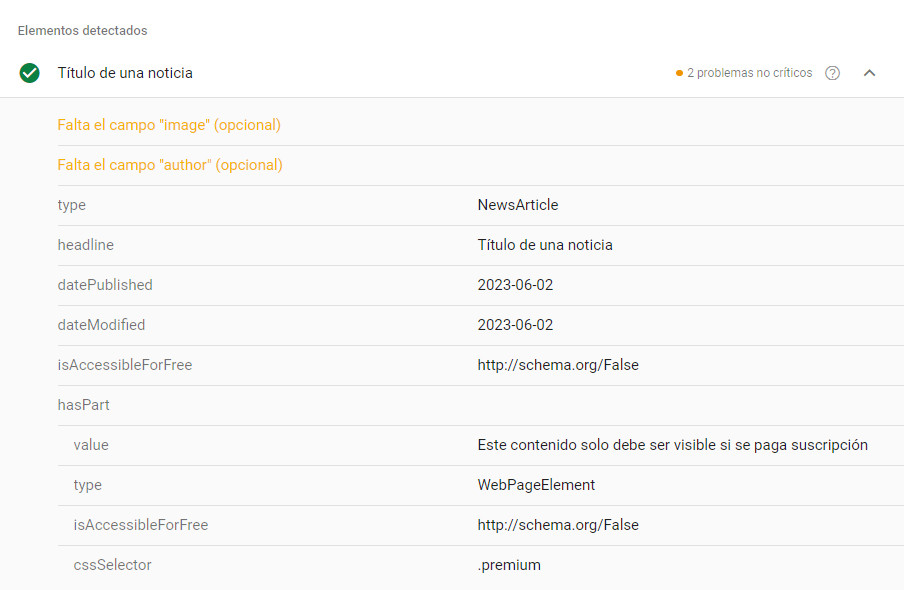

Since NewsArticle is relatively simple to implement and has few required properties, let’s assume it is included on a website that contains the code above (with the premium and example CSS classes). Here’s an example of a NewsArticle structured data snippet:

```html
<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "NewsArticle",
  "headline": "News Title",
  "datePublished": "2023-06-02",
  "dateModified": "2023-06-02"
}
</script>
```

In this snippet, we are telling Google that we have an article titled "News Title" that was published on June 2, 2023.

If it were a paid article (which is the focus here), we would need to add the property isAccessibleForFree with the Boolean value false. We would also add a hasPart property containing a WebPageElement that is itself marked isAccessibleForFree: false. Within hasPart, we must specify, through a CSS class selector, which element is not accessible for free. In our example, this is the content inside the div with the premium class.

Thus, the snippet would contain the following information:

```json
"isAccessibleForFree": false,
"hasPart": {
  "@type": "WebPageElement",
  "isAccessibleForFree": false,
  "cssSelector": ".premium"
}
```

Since this fragment cannot stand alone, we need to merge it into the structured data that represents the content, in this case the NewsArticle JSON. Our final code would look like this:

```html
<body>
  <section id="content">
    <div> Lorem ipsum....</div>
    <div data-nosnippet class="premium">This content should only be visible to subscribers.</div>
    <div class="example">This content is for another example.</div>
  </section>

  <!-- Now the JSON -->
  <script type="application/ld+json">
  {
    "@context": "https://schema.org",
    "@type": "NewsArticle",
    "headline": "News title",
    "datePublished": "2023-06-02",
    "dateModified": "2023-06-02",
    "isAccessibleForFree": false,
    "hasPart": {
      "@type": "WebPageElement",
      "isAccessibleForFree": false,
      "cssSelector": ".premium"
    }
  }
  </script>
</body>
```
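If pages are generated by a backend, the same JSON-LD can be produced with a JSON serializer instead of being written by hand. This guarantees valid syntax and real JSON booleans. The following is a minimal Python sketch, assuming the headline, dates, and paywalled class come from your CMS. The function name is hypothetical.

```python
import json


def paywalled_news_article(headline: str, published: str, modified: str,
                           paywalled_class: str) -> str:
    """Return a <script> tag with NewsArticle JSON-LD marking one class as paywalled."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "datePublished": published,
        "dateModified": modified,
        "isAccessibleForFree": False,  # serialized as the JSON boolean false
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            # A class selector with the leading dot, as in CSS.
            "cssSelector": "." + paywalled_class,
        },
    }
    # Escape "</" so a value such as "</script>" cannot close the tag early.
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```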

This way, the div element with the class premium can be hidden from users without a subscription while remaining accessible and crawlable by Google, which now knows that this part of the page is paywalled.

It's important to note that this method should be applied to div elements, and the cssSelector must target a class. As shown in the code example, a dot (.) is placed before the class name, just like in a CSS class selector.
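Google has to find the element that the cssSelector points to, so a quick pre-publish check can catch mismatches between the markup and the structured data. The following is a minimal sketch using only the standard library. It assumes each selector is a single class selector, as described above, and it is not a full CSS selector engine. It flags selectors that lack the leading dot or that match no class in the HTML.

```python
import json
from html.parser import HTMLParser


class ClassCollector(HTMLParser):
    """Collect every class name used on any element in the page."""

    def __init__(self) -> None:
        super().__init__()
        self.classes: set[str] = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                self.classes.update(value.split())


def missing_paywall_classes(html: str, json_ld: str) -> list[str]:
    """Return hasPart cssSelectors that are not '.class' or match no element."""
    collector = ClassCollector()
    collector.feed(html)
    parts = json.loads(json_ld).get("hasPart", [])
    if isinstance(parts, dict):
        parts = [parts]  # hasPart may be a single object or a list
    problems = []
    for part in parts:
        selector = part.get("cssSelector", "")
        if not selector.startswith(".") or selector[1:] not in collector.classes:
            problems.append(selector)
    return problems
```

An empty list means every paywalled selector points at a class that exists in the page.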

When analyzing structured data, it should appear like this:

It is important that Google can crawl and view the content intended for indexing normally. Properly implementing this practice is not cloaking; it simply gives Google access to content intended to be indexed and crawled, even if it is not free.

In many cases, even when the implementation is not perfect, the content is present in the HTML and hidden only with JavaScript, so it remains visible and crawlable without JavaScript. Because Google receives the full content in the HTML, this setup may not be flagged as cloaking. The trade-off is that any user who disables JavaScript can also see the content. This is usually acceptable, as only a small minority of users know how to do this.

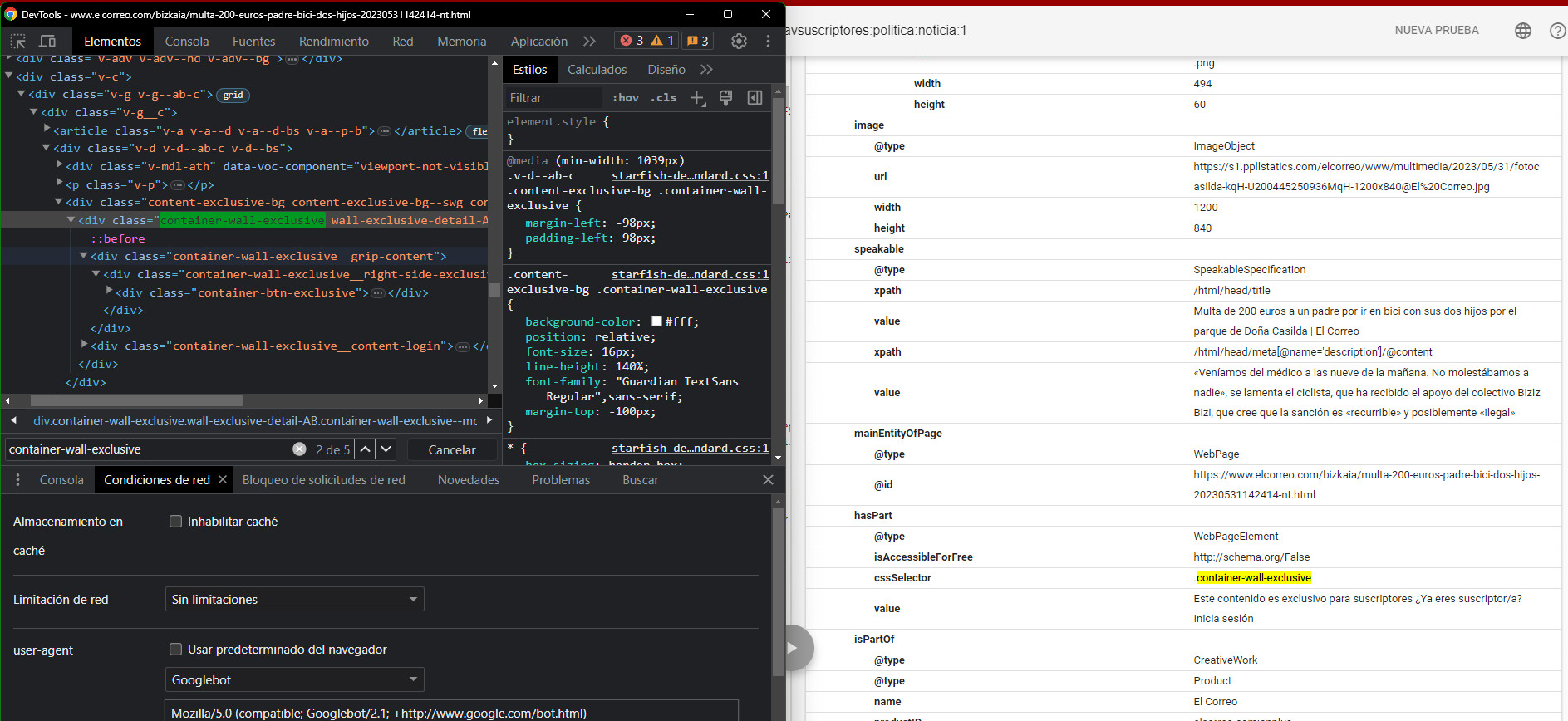

In the following example, we can see how this site implements it in an apparently correct way:

Here, all content within the div element with the class container-wall-exclusive can be hidden from users who have not paid, but in theory, Google will still have access to it.

I currently offer advanced SEO training in Spanish. Would you like me to create an English version? Let me know!

If you liked this post, you can always show your appreciation by liking this LinkedIn post about the same article.