Documented evidence of a persistent 5xx error in a robots.txt file

I know this is an unusual question, and this post is not about a common case.

However, the question arose from an inconsistency I found in Google's documentation and from a post by Gary Illyes himself, a Google representative. His post said the following:

A robots.txt file that returns a 500/503 HTTP status code for an extended period of time will remove your site from search results, even if the rest of the site is accessible to Googlebot. The same applies to network timeouts. (Gary Illyes)

Curiously, when I checked the documentation, it said that if robots.txt returned a 5xx error for a long time, Google treated it as if there were no crawl restrictions, and yet two paragraphs later it said that the entire site was considered inaccessible.

Obviously, the two statements contradict each other, and this needs a deeper explanation.

Logic and experience told me that Gary Illyes was wrong, and there were even comments agreeing with me. But these things have to be proven with research, not with words.

So I turned to MJ Cachón, the person I know in the industry who most enjoys running experiments with Google. Cachón listened to my proposal, agreed to collaborate on the research, and gave me access to the website https://rana.ninja/.

My premise was the following:

When robots.txt returns a 5xx, Google uses its last cached version, which it keeps for one month. After that month, the robots.txt is treated like a 4xx, meaning there are no crawl restrictions, as stated in one of the versions of the official documentation.

However, let's see what actually happens. And please, never run these experiments on production projects you cannot afford to lose.
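To make the premise concrete, it can be written down as a small decision function. This is a sketch of the premise only, not Google's implementation: it assumes a 30-day grace period, treats 429 like a 5xx (Google's documentation groups them together), and for the case of a 5xx with no cached copy it follows RFC 9309 ("assume complete disallow") rather than anything observed in this test. The names `decide` and `RobotsDecision` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed grace period: "one month", per the documentation version cited above.
CACHE_GRACE_DAYS = 30


@dataclass
class RobotsDecision:
    mode: str                    # "fetched", "cached", "allow_all" or "disallow_all"
    rules: Optional[str] = None  # robots.txt body whose rules apply, if any


def decide(status: int, body: Optional[str], days_failing: float,
           cached_body: Optional[str]) -> RobotsDecision:
    """Hypothetical model of the premise; not Google's actual implementation."""
    if 200 <= status < 300:
        return RobotsDecision("fetched", body or "")
    if 400 <= status < 500 and status != 429:
        # 4xx: treated as if there were no robots.txt, so nothing is blocked.
        return RobotsDecision("allow_all")
    if status >= 500 or status == 429:
        if days_failing <= CACHE_GRACE_DAYS:
            if cached_body is not None:
                # Within the grace period, keep obeying the last cached copy.
                return RobotsDecision("cached", cached_body)
            # No cached copy: assume the whole site is blocked (RFC 9309, 2.3.1.4).
            return RobotsDecision("disallow_all")
        # Grace period over: treat the 5xx like a 4xx, i.e. no restrictions.
        return RobotsDecision("allow_all")
    raise ValueError(f"status {status} (e.g. redirects) is not modeled here")
```

Under this model, a URL such as /page/ stays blocked by the cached rules early in the outage and becomes crawlable once the grace period ends.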

Shortly after starting the research, Google changed the documentation.

Google did not announce the changes, and the new text still contradicts Gary Illyes' claim in his post, but these are the changes made to the official documentation about 5xx errors on robots.txt in December 2024:

| Situation | Before (17/12/2024) | Now (24/12/2024) |
|---|---|---|
| 5xx or 429 error when requesting robots.txt | Google interprets it as if the entire site were temporarily blocked. | Google stops crawling the site for 12 hours, but continues trying to obtain the robots.txt. |
| 30 days without being able to obtain robots.txt | Uses the last cached version. If there is no copy, assumes there are no restrictions. | Uses the last cached version. If there is no copy, keeps trying, with behavior depending on site availability. |
| After 30 days | Continues assuming no restrictions if there is no cached copy. | Google assumes there is no robots.txt and continues crawling normally, but keeps trying to obtain it. |

In my view, and based on the information we gathered, the rest of the website does seem to be crawled from the start. As the documentation says, the rules in the last cached robots.txt are respected for one month, after which there are no restrictions at all.

Let's go to the documented evidence.

We start from the beginning.

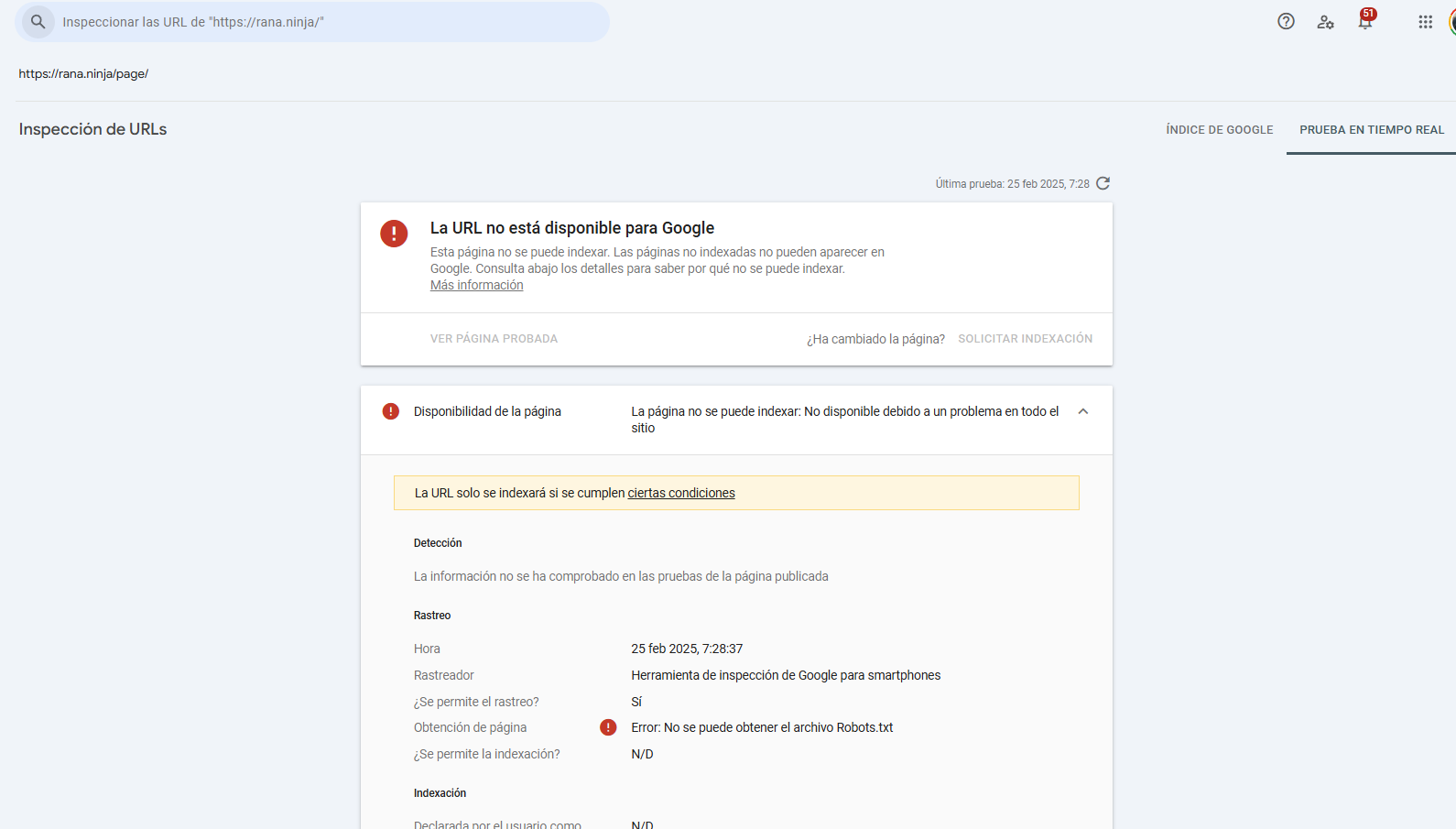

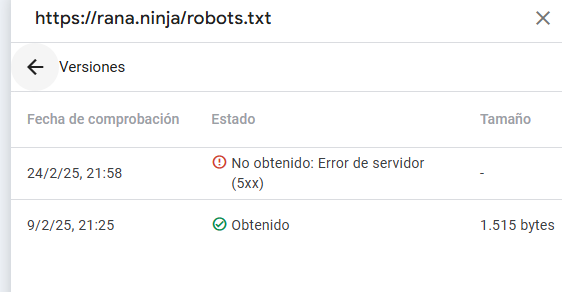

Cachón makes the website's robots.txt return a 5xx error; the last crawl of the robots.txt that Google has on record is from 09/02/2025.

This is what the last version of Cachón's robots.txt cached by Google looked like:
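For anyone reproducing the test on a throwaway domain, the key condition is that only robots.txt fails while the rest of the site keeps answering normally. The post does not say how the server was configured, so the following is only a minimal local sketch of that condition using Python's standard `http.server`; the handler name, the `make_server` helper and the `Retry-After` value are hypothetical choices.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class RobotsOutageHandler(BaseHTTPRequestHandler):
    """Hypothetical test handler: 503 for /robots.txt, 200 for every other path."""

    def do_GET(self) -> None:
        path = self.path.split("?", 1)[0]  # ignore any query string
        if path == "/robots.txt":
            status, body = 503, b"Service Unavailable"
        else:
            status, body = 200, b"<html><body>Regular page</body></html>"
        self.send_response(status)
        if status == 503:
            # Optional hint for clients; 3600 seconds is an arbitrary example value.
            self.send_header("Retry-After", "3600")
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # silence the default per-request logging


def make_server(port: int = 8000) -> HTTPServer:
    """Bind to localhost only; a real test needs a public host Googlebot can reach."""
    return HTTPServer(("127.0.0.1", port), RobotsOutageHandler)
```

Running `make_server(8000).serve_forever()` and requesting http://127.0.0.1:8000/robots.txt should return a 503, while any other path returns a 200.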

User-agent: *

Disallow: guia-definitiva-de-screaming-frog-impulsa-el-seo-de-tu-web/

Disallow: guia-screaming/

Disallow: 13-consejos-screaming-frog-que-debes-saber/

Disallow: wp-content/themes/twentytwenty/assets/js/index.js?ver=2.0

Allow: /wp-content/uploads/*

Allow: /wp-content/*.js

Allow: /wp-content/*.css

Allow: /wp-includes/*.js

Allow: /wp-includes/*.css

Allow: /*.css$

Allow: /*.js$

Disallow: /cgi-bin

Disallow: /wp-content/plugins/

Allow: /wp-content/plugins/*.jpeg

Allow: /wp-content/plugins/*.png

Allow: /wp-content/plugins/*.js

Allow: /wp-content/plugins/*.css

Disallow: /wp-content/themes/

Allow: /wp-content/themes/*.js

Allow: /wp-content/themes/*.css

Allow: /wp-content/themes/*.woff2

Disallow: /wp-includes/

Disallow: /*/attachment/

Disallow: /tag/*/page/

Disallow: /tag/*/feed/

Disallow: /page/

Disallow: /comments/

Disallow: /xmlrpc.php

Disallow: /?attachment_id*

Disallow: *?

Disallow: ?s=

Disallow: /search

Disallow: /trackback

Disallow: /*trackback

Disallow: /*trackback*

Disallow: /*/trackback

Allow: /feed/$

Disallow: /feed/

Disallow: /comments/feed/

Disallow: */feed/$

Disallow: */*/feed/$

Disallow: */feed/rss/$

Disallow: */trackback/$

Disallow: */*/feed/$

Disallow: */*/feed/rss/$

Disallow: */*/trackback/$

Disallow: */*/*/feed/$

Disallow: /*/*/*/feed/rss/$

Disallow: /*/*/*/trackback/$

Sitemap: https://rana.ninja/sitemap_index.xml

Sitemap: https://rana.ninja/post-sitemap.xml

Sitemap: https://rana.ninja/page-sitemap.xml

So, as a test, we check crawling of https://rana.ninja/page/, which should be blocked according to the last cached version. That is indeed what happens, even though robots.txt returns a 5xx.

A live test confirms it too: the URL cannot be crawled despite the 5xx on robots.txt.
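Why /page/ is blocked can be checked by hand with the matching rules Google documents and RFC 9309 standardizes: `*` matches any sequence of characters, a trailing `$` anchors the end of the path, and when several rules match, the most specific (longest) one wins, with Allow winning ties. The sketch below applies those rules to a few lines copied from the cached file above. It is a simplification: it assumes a single `User-agent: *` group, compares raw paths without percent-encoding normalization, and measures specificity by pattern length; `is_allowed`, `pattern_to_regex` and `CACHED_RULES` are hypothetical names.

```python
import re
from typing import List, Tuple


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a regex anchored at the path start."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # '*' matches any run of characters; every other character is literal.
    body = "".join(".*" if ch == "*" else re.escape(ch) for ch in pattern)
    return re.compile(body + (r"\Z" if anchored else ""))


def is_allowed(path: str, rules: List[Tuple[str, str]]) -> bool:
    """Longest matching pattern wins; on a tie, Allow wins; no match means allowed."""
    best_len = -1
    allowed = True
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow value blocks nothing
        if pattern_to_regex(pattern).match(path):
            length = len(pattern)
            if length > best_len or (length == best_len and directive == "allow"):
                best_len = length
                allowed = directive == "allow"
    return allowed


# A few rules copied from the cached robots.txt shown above.
CACHED_RULES = [
    ("disallow", "/page/"),
    ("allow", "/feed/$"),
    ("disallow", "/feed/"),
    ("disallow", "/wp-content/plugins/"),
    ("allow", "/wp-content/plugins/*.css"),
]

print(is_allowed("/page/", CACHED_RULES))      # False: Disallow: /page/ is the only match
print(is_allowed("/feed/", CACHED_RULES))      # True: Allow: /feed/$ is longer than Disallow: /feed/
print(is_allowed("/feed/rss/", CACHED_RULES))  # False: /feed/$ does not match, /feed/ does
```

The same evaluation explains why the cached rules keep /page/ out of reach for as long as Google keeps using that cached copy.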

In the first days after leaving robots.txt returning a 503, this is what happens:

Apparently, Google continues to respect the robots.txt directives.

The only noticeable difference after these days is that Google Search Console has removed the oldest cached version:

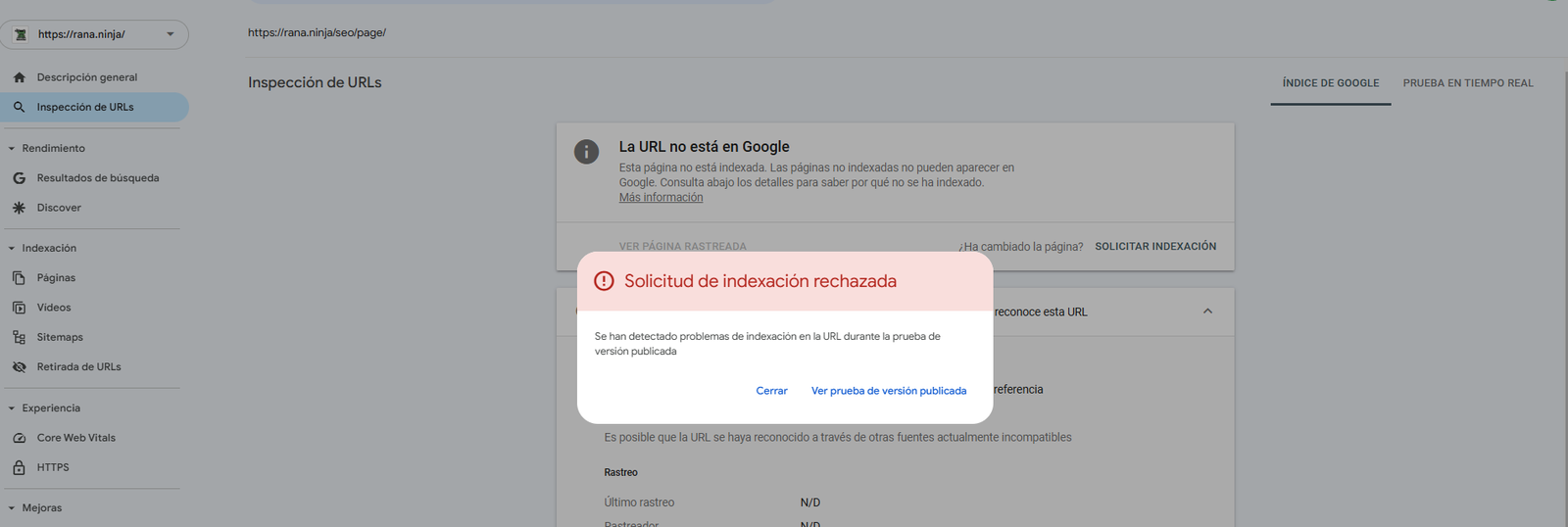

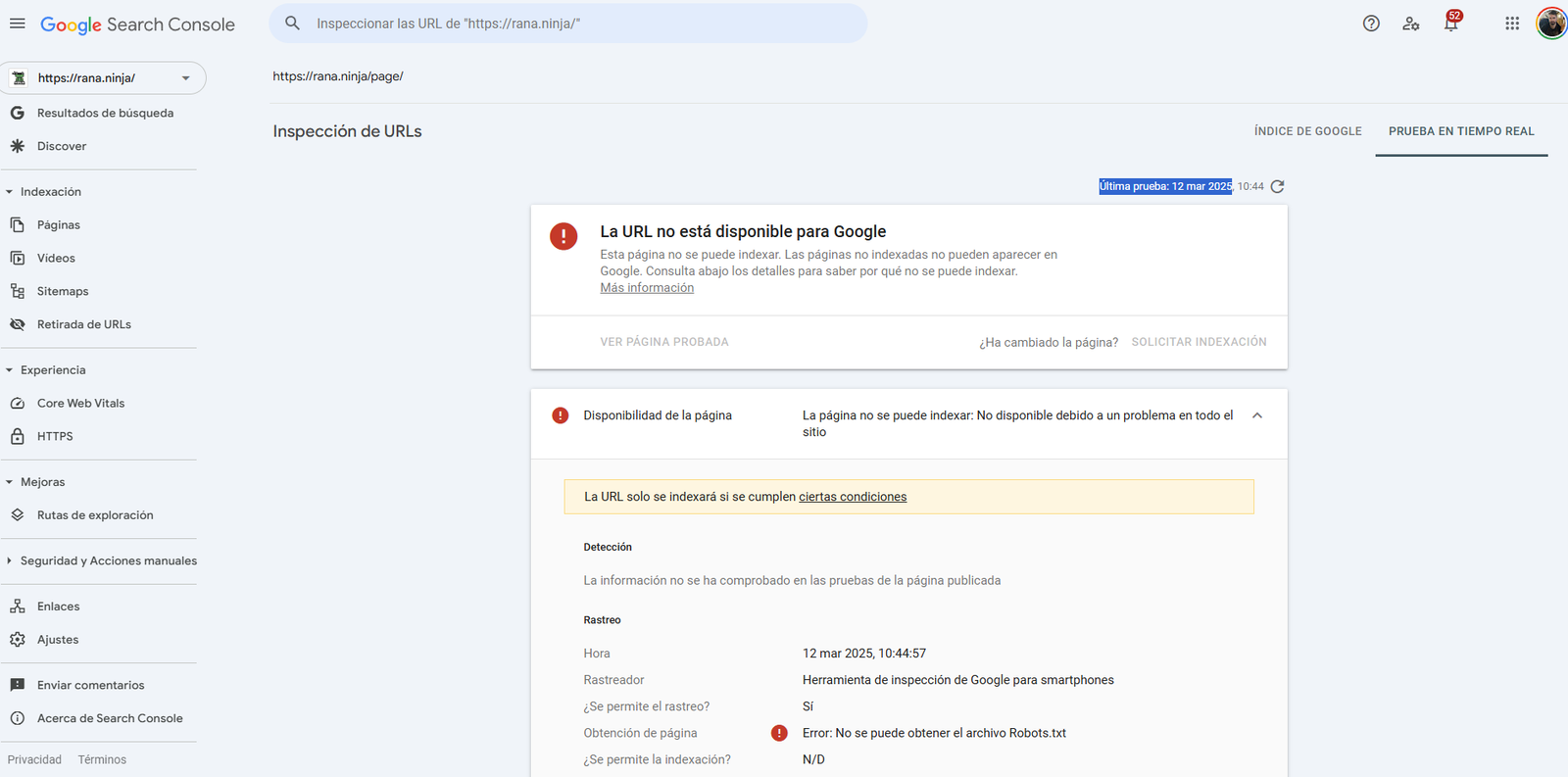

We wanted to check whether the disaster Gary Illyes announced would actually happen, but after several months, this is what we see:

The cached robots.txt history disappears:

The website content is totally crawlable and indexable:

The page https://rana.ninja/page/ doesn't appear as blocked by the robots.txt:

BUT indexing is not allowed:

After a few months of the test, indexing drops dramatically:

So, although the documentation says Google behaves as if robots.txt had no restrictions and everything can be crawled, deindexing seems to happen progressively while the 5xx error on robots.txt continues.

And although there are URLs that continue to be shown in Google:

It's only a matter of time before this fatal deindexing spreads throughout the entire website:

Our interpretation may be more or less accurate. And although this case is somewhat marginal, these are the study's conclusions about what happens in this situation:

Edit 26/05/2025: The following image shows that once robots.txt was restored, the pages went back to being indexed normally.

I currently offer advanced SEO training in Spanish. Would you like me to create an English version? Let me know!

If you liked this post, you can always show your appreciation by liking this LinkedIn post about this same article.