Different ways of rendering a web page with JS frameworks and how they affect SEO

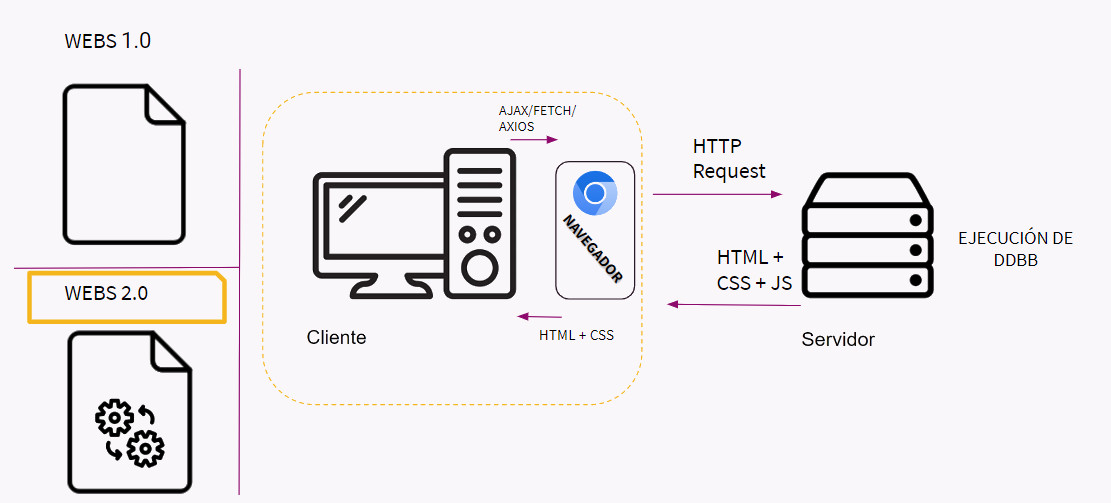

JavaScript is primarily a client-side programming language. This means that, with a few exceptions, it is executed in the user's browser rather than on the server. This is a major advantage because it makes a website more dynamic. Furthermore, even if the website receives many requests, the cost of processing the JavaScript is borne by each user's device. This takes load off the server and can deliver very good speed (depending somewhat on the user's device).

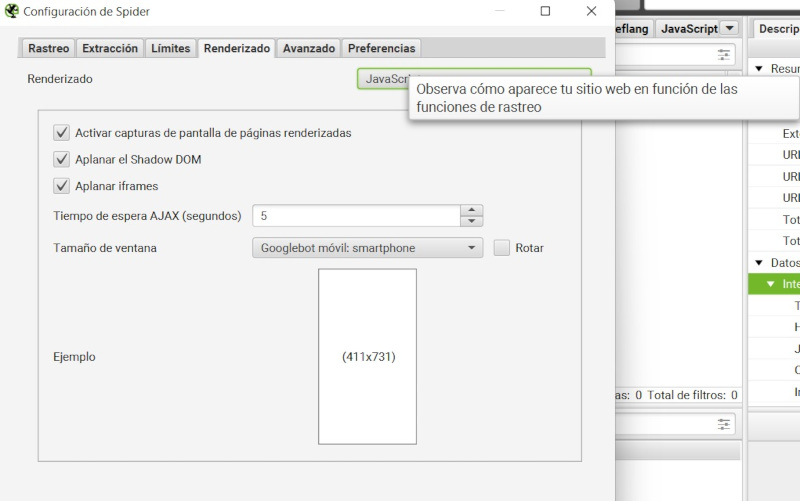

The problem is that crawling a website with all its pages requires a lot of time and resources. You can see this for yourself by crawling the same website in Screaming Frog with and without JavaScript rendering and comparing how long each crawl takes.

For this reason, it makes sense that crawlers like Googlebot, which have to crawl millions of pages, make their first pass over a website without executing JavaScript, so they can process all the information quickly. It's not that Google can't process JavaScript; it's that doing so consumes more resources, so it renders pages later, when resources allow.

Consequently, any element generated by JavaScript may be missed by search engines on that first pass. This is a problem, given that many websites use this technology precisely because of the performance boost it offers.

Today there are many powerful front-end frameworks. Many large websites use them, especially when they need many features, and they stand out for the excellent speed they deliver to the user.

For those unsure what a framework is, here is an excerpt from another post:

A framework is a structure with a set of pre-written code that establishes a working environment, so you avoid starting from scratch, standardizes the way things are done in the project, and ensures its scalability.

— Carlos Sánchez in Frameworks in SEO.

In another post I'll go into more detail about frameworks like Vue.js, libraries like React (whether React is a framework or a library is debated, and I won't go into that here), and their alternatives. In this post, however, I'm going to focus on something common to all of them: the type of rendering they use. In short, rendering is the process of generating the content that is displayed to the user.

None of this means that these frameworks are bad for ranking in Google (quite the opposite), but the way content is rendered must be optimized so that it's readable and easily crawlable by all search engines.

The most common forms of rendering are:

| Type | Description | SEO advantages | Considerations |
|---|---|---|---|
| CSR (Client-Side Rendering) | Renders in the browser with JS | Flexible; fast if managed well | Risk of empty content for Google if JS fails |
| SSR (Server-Side Rendering) | Renders on the server on every request | Content visible and indexable from the start | Server load; requires hydration for SPA behavior |
| Dynamic rendering | CSR for users, SSR for bots | Currently none | Obsolete and discouraged by Google |
| Hybrid | Combines CSR, SSR and SSG as appropriate | Very flexible depending on the content | Complex to implement and maintain |
| SSG (Static Site Generation) | Generates static HTML at build time | Very fast and efficient | Not suitable for dynamic content |
| DSG (Deferred Static Generation) | Renders on demand and caches | Website speed | Less customizable than ISR |
| ISR (Incremental Static Regeneration) | Renders statically and revalidates on access | Website speed, scalability, and customization | Best option for a large project if you can choose |

I've included an example page that uses client-side rendering so you can see how it works as you follow the explanation.

JavaScript has historically been known as a language that runs on the user's side.

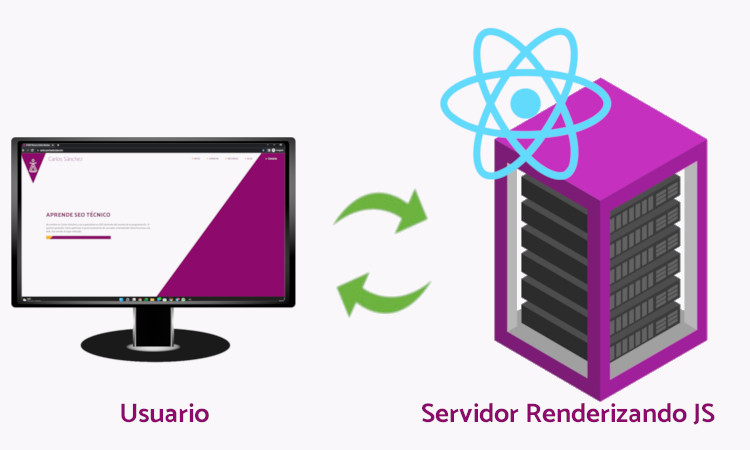

This means it can render content in the browser (in fact, that is how it works by default).

CSR takes this to the extreme. The entire front end of the website is built with JavaScript, so all content, including HTML tags, images, and CSS, is loaded through JavaScript.

This has a number of advantages and disadvantages, but the SEO disadvantages are of enormous importance.

The advantage is that each user performs the entire loading process on their own device, so the load on the server is much lower. The trade-off is that the website's speed depends on each user's device, although websites generally don't need many resources to process.

However, the downside is significant. If we check the example page, we can see that whether we view the source code or run a standard crawl with Screaming Frog, no internal links, meta tags, or content are detected.

This happens for a simple reason: the content is crawled or inspected before the JavaScript runs. The same thing usually happens when Google first crawls our website.
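To make this concrete, here is a minimal TypeScript sketch of what a crawler that does not execute JavaScript can extract from a typical CSR response compared with a server-rendered one. The HTML strings, the `/app.js` bundle and the `extractLinks` helper are hypothetical, and the regex is only good enough for a demo, not a replacement for a real HTML parser.

```typescript
// What a crawler that does NOT run JavaScript can see in two responses.
// All HTML below is a hypothetical example.

// Collect href values from <a> tags with a simple regex (demo only).
function extractLinks(html: string): string[] {
  const links: string[] = [];
  const anchorRe = /<a\s[^>]*href="([^"]*)"/gi;
  let match: RegExpExecArray | null;
  while ((match = anchorRe.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}

// Typical CSR response: an empty mount point plus a JavaScript bundle.
const csrShell = `<!doctype html>
<html><head><title></title></head>
<body><div id="root"></div><script src="/app.js"></script></body></html>`;

// The same page when the server already sends the rendered HTML.
const serverRendered = `<!doctype html>
<html><head><title>Blue shoes</title></head>
<body><div id="root">
  <h1>Blue shoes</h1>
  <a href="/shoes/red">Red shoes</a>
  <a href="/shoes/green">Green shoes</a>
</div><script src="/app.js"></script></body></html>`;

console.log(extractLinks(csrShell).length);       // 0: nothing to follow
console.log(extractLinks(serverRendered).length); // 2
```

Only after the bundle runs in a browser (or a headless renderer) would the CSR page contain any links to extract.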

Even though Googlebot uses the latest version of Chromium, it won't be able to properly crawl these websites at first. According to Google's documentation, it puts every indexable page in the rendering queue. Only once Google's resources allow it (which largely depends on crawl budget, already a drawback) does Google render the page with a headless version of Chromium that executes the JavaScript. After all that, Googlebot parses the rendered HTML once more looking for links, and if it finds any, it adds them to the crawl queue.

As we can see, the page has to go through three passes before Google understands it. Crawling this type of page is slow and laborious, which can be a major obstacle for new projects and a competitive disadvantage for established ones.
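The process above can be modelled with a toy crawler in TypeScript. This is a deliberately simplified sketch under stated assumptions, not Google's actual pipeline: each hypothetical page has a `rawHtml` (what the first crawl sees) and a `renderedHtml` (what exists after JavaScript runs), rendering only happens once the crawl queue is empty (standing in for "when resources allow"), and pages with `noindex` in the raw HTML are never rendered.

```typescript
// Toy model of crawl -> render queue -> re-crawl. NOT Google's implementation.

interface Page {
  rawHtml: string;      // HTML returned by the server (first pass)
  renderedHtml: string; // HTML after JavaScript has run
}

function extractLinks(html: string): string[] {
  const links: string[] = [];
  const anchorRe = /<a\s[^>]*href="([^"]*)"/gi;
  let match: RegExpExecArray | null;
  while ((match = anchorRe.exec(html)) !== null) links.push(match[1]);
  return links;
}

function isNoindex(html: string): boolean {
  return /<meta\s+name="robots"\s+content="[^"]*noindex/i.test(html);
}

function crawl(site: Map<string, Page>, start: string) {
  const crawlQueue: string[] = [start];
  const renderQueue: string[] = [];
  const seen = new Set<string>([start]);
  const crawled: string[] = [];
  const rendered: string[] = [];

  const enqueue = (links: string[]): void => {
    for (const link of links) {
      if (site.has(link) && !seen.has(link)) {
        seen.add(link);
        crawlQueue.push(link);
      }
    }
  };

  while (crawlQueue.length > 0 || renderQueue.length > 0) {
    const url = crawlQueue.shift();
    if (url !== undefined) {
      // First pass: fetch and parse the raw HTML only.
      const page = site.get(url)!;
      crawled.push(url);
      enqueue(extractLinks(page.rawHtml));
      // A noindex in the raw HTML means the page never reaches rendering.
      if (!isNoindex(page.rawHtml)) renderQueue.push(url);
      continue;
    }
    // Later: render the page, then parse the rendered HTML for new links.
    const toRender = renderQueue.shift()!;
    rendered.push(toRender);
    enqueue(extractLinks(site.get(toRender)!.renderedHtml));
  }
  return { crawled, rendered };
}

// Hypothetical CSR site: every link only exists after rendering.
const shell = '<div id="root"></div>';
const site = new Map<string, Page>([
  ["/", { rawHtml: shell, renderedHtml: '<a href="/a">A</a><a href="/b">B</a>' }],
  ["/a", { rawHtml: shell, renderedHtml: '<a href="/c">C</a>' }],
  ["/b", { rawHtml: '<meta name="robots" content="noindex">' + shell, renderedHtml: '<a href="/d">D</a>' }],
  ["/c", { rawHtml: shell, renderedHtml: "<p>C</p>" }],
  ["/d", { rawHtml: shell, renderedHtml: "<p>D</p>" }],
]);

const result = crawl(site, "/");
console.log(result.crawled.join(" "));  // / /a /b /c
console.log(result.rendered.join(" ")); // / /a /c
```

In this model `/d` is never discovered, because its only link is generated by JavaScript on a page that is `noindex` in its raw HTML.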

You can verify this with Screaming Frog itself by enabling JavaScript rendering in its configuration. This is the only way Screaming Frog can read pages like the example, but the crawl takes much longer, just as it does for Google.

After all, Screaming Frog is a spider like Googlebot and works on a similar principle. If Google crawled every website with JavaScript rendering enabled by default, the process would be far more complex and expensive, both for websites and for Google's own servers.

Historically, these implementations have been a real pain. However, Martin Splitt stated emphatically at SOB2023 that Google crawls and understands JavaScript perfectly well, and that problems only arise if developers are creative enough to make the website incomprehensible. So CSR by itself shouldn't pose any problems.

Be very careful to include the indexing signals of every indexable page in the plain HTML, and avoid setting a canonical tag in the static HTML and then changing it during rendering. This prevents situations such as a static template whose canonical tag points to the home page. If Google doesn't consider a page indexable from its initial HTML, it won't render it.
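One way to catch these problems before release is to compare the indexing signals in the initial HTML with those in the rendered HTML. The following TypeScript sketch is a hypothetical helper, not a real tool's API; it assumes the `rel`/`href` and `name`/`content` attributes appear in that order, which a real audit should not assume (use an HTML parser instead).

```typescript
// Hypothetical check: do the indexing signals survive rendering unchanged?

interface IndexSignals {
  canonical: string | null;
  robots: string | null;
}

// Simplified extraction: assumes attribute order rel/href and name/content.
function readSignals(html: string): IndexSignals {
  const canonical = /<link\s+rel="canonical"\s+href="([^"]*)"/i.exec(html);
  const robots = /<meta\s+name="robots"\s+content="([^"]*)"/i.exec(html);
  return {
    canonical: canonical ? canonical[1] : null,
    robots: robots ? robots[1].toLowerCase() : null,
  };
}

function compareSignals(rawHtml: string, renderedHtml: string): string[] {
  const raw = readSignals(rawHtml);
  const rendered = readSignals(renderedHtml);
  const problems: string[] = [];

  if (raw.canonical === null) {
    problems.push("canonical missing from the initial HTML");
  } else if (raw.canonical !== rendered.canonical) {
    problems.push(`canonical changes after rendering: ${raw.canonical} -> ${rendered.canonical}`);
  }
  if (raw.robots !== null && raw.robots.includes("noindex")) {
    problems.push("noindex in the initial HTML: the page may never be rendered");
  }
  return problems;
}

// Static template points the canonical at the home page; JS "fixes" it later.
const rawHtml = '<link rel="canonical" href="https://example.com/">';
const renderedHtml = '<link rel="canonical" href="https://example.com/shoes/blue">';
console.log(compareSignals(rawHtml, renderedHtml));      // one problem: the canonical changes
console.log(compareSignals(renderedHtml, renderedHtml)); // no problems
```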

Special care must be taken with non-indexable pages on this type of website, because Google won't make the effort to render JavaScript on a page that doesn't allow indexing.

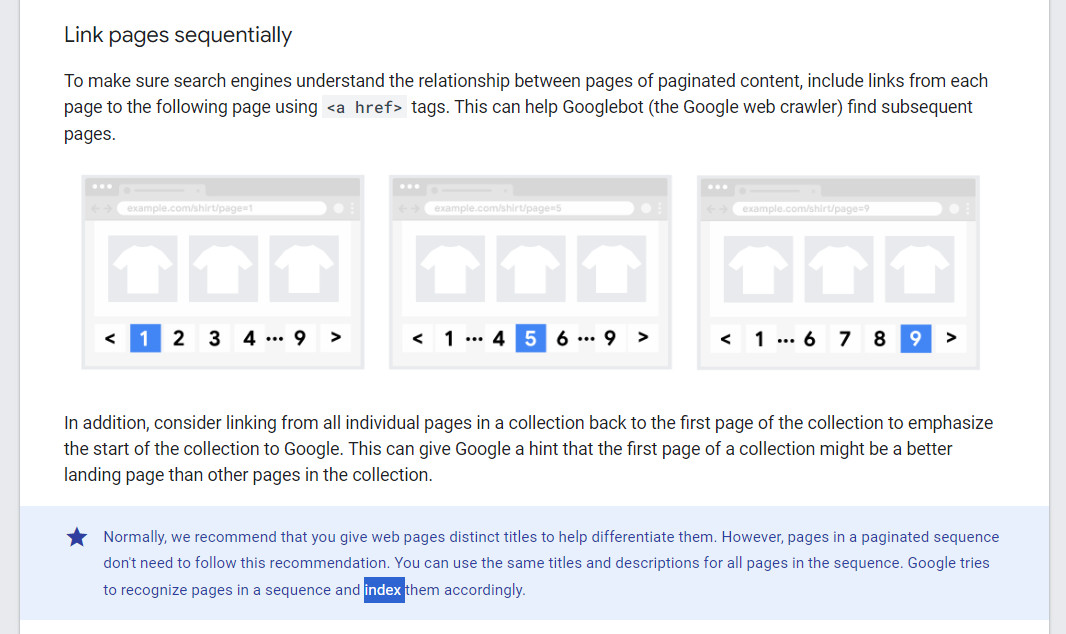

If we follow Google's criterion of not indexing pages that apply sorting options (something its own documentation contradicts, and a practice that is still widely debated), we run into a problem: the page would be crawled without being rendered, so its links would not be followed.

It is true that noindex pages lose importance for crawling and eventually end up being treated as nofollow, but with CSR the effect is drastic: Google could not see or crawl any of the links generated by JavaScript, which on a CSR page means all of them.

In other words, while a noindex page normally behaves like nofollow only in the long run, with this type of rendering its links don't exist from minute zero.

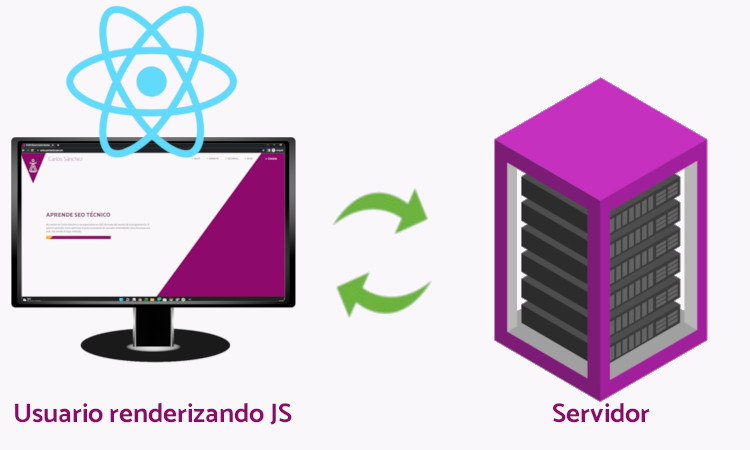

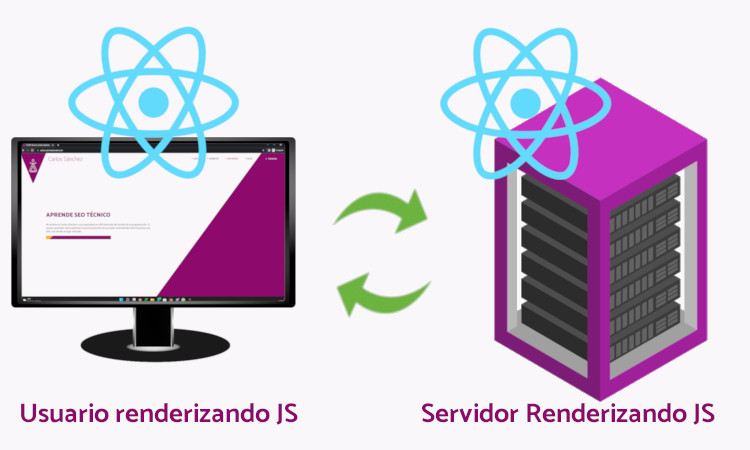

Unlike client-side rendering, SSR renders the JavaScript on the server every time a user makes a request. This ensures that all information is crawlable and understandable by search engines, because the response to each request already contains the rendered HTML.

Server-side rendering is a pre-rendering method that generates new HTML on the server for each request (unlike static rendering).
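A minimal TypeScript sketch of the idea: a request handler that builds the full HTML (title, canonical, heading and internal links) every time it is called. The product data, `handleRequest` and the `/app.js` bundle are hypothetical, and the sketch is framework-agnostic rather than the API of any particular SSR framework. The counter makes the server-side cost visible: two requests for the same URL mean two renders.

```typescript
// Minimal SSR sketch: the server renders complete HTML on every request.
// Products and routes are hypothetical stand-ins for a database or API.

interface Product {
  slug: string;
  name: string;
  related: string[];
}

const products = new Map<string, Product>([
  ["blue-shoes", { slug: "blue-shoes", name: "Blue shoes", related: ["red-shoes"] }],
  ["red-shoes", { slug: "red-shoes", name: "Red shoes", related: ["blue-shoes"] }],
]);

let renderCount = 0; // how many times the server has rendered a page

// NOTE: no HTML escaping here to keep it short; real code must escape data.
function handleRequest(path: string): { status: number; html: string } {
  renderCount++;
  const product = products.get(path.replace(/^\/products\//, ""));
  if (product === undefined) {
    return { status: 404, html: "<h1>Not found</h1>" };
  }
  const links = product.related
    .map((slug) => `<a href="/products/${slug}">${products.get(slug)?.name ?? slug}</a>`)
    .join("");
  const html =
    `<!doctype html><html><head><title>${product.name}</title>` +
    `<link rel="canonical" href="/products/${product.slug}"></head>` +
    `<body><h1>${product.name}</h1>${links}` +
    // The client bundle can hydrate this markup to make it interactive.
    `<script src="/app.js"></script></body></html>`;
  return { status: 200, html };
}

const first = handleRequest("/products/blue-shoes");
handleRequest("/products/blue-shoes");
console.log(first.status, renderCount); // 200 2
```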

Server-side rendering isn't a silver bullet: its dynamic nature can put a significant load on the server. The same application is typically rendered twice, once on the server and again on the client (hydration). And just because server-side rendering gets something on screen faster doesn't mean the server has less work to do. In fact, on websites with a lot of visitors, you need a powerful server or a good CDN to avoid overload.

Even if the first page loads very quickly, the number of requests, the full page reloads, and somewhat limited interactivity can make it a limiting option.

Even so, it's the main and most common solution when working with a JS framework, because it immediately solves the indexing problems this technology can cause.

Dynamic rendering, in basic terms, detects crawlers (for example, by their user agent) and sends them to a version of the website processed by a renderer, which serves static HTML, while users go directly to the regular CSR version.
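For illustration only (Google now discourages this approach), the routing decision at the heart of dynamic rendering can be sketched in TypeScript as a user-agent check. The bot pattern, `serve` and the two HTML variants are assumptions for the example; in practice this check sat in server middleware that forwarded bot requests to a headless renderer.

```typescript
// Dynamic rendering sketch: crawlers get prerendered HTML, users get the CSR app.
// The list of bot tokens is illustrative, not exhaustive.
const BOT_PATTERN = /googlebot|bingbot|yandexbot|duckduckbot|baiduspider/i;

function isCrawler(userAgent: string): boolean {
  return BOT_PATTERN.test(userAgent); // no /g flag, so test() is stateless
}

function serve(userAgent: string, prerenderedHtml: string, csrShellHtml: string): string {
  return isCrawler(userAgent) ? prerenderedHtml : csrShellHtml;
}

const prerendered = "<h1>Blue shoes</h1>";
const shell = '<div id="root"></div><script src="/app.js"></script>';

const googlebotUA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
const chromeUA =
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36";

console.log(serve(googlebotUA, prerendered, shell));        // <h1>Blue shoes</h1>
console.log(serve(chromeUA, prerendered, shell) === shell); // true
```

Because both responses must carry the same content, the prerendered version has to be produced from the same data as the CSR app; otherwise this routing turns into cloaking.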

It's important to note that Googlebot doesn't consider dynamic rendering to be cloaking, as long as both versions have the same content. As Martin Splitt himself stated:

Googlebot generally doesn't consider dynamic rendering as cloaking. As long as your dynamic rendering produces similar content, Googlebot won't view dynamic rendering as cloaking.

— Martin Splitt.

Error pages that dynamic rendering may produce are not considered cloaking either; they are treated like any other error page.

What is considered cloaking is using dynamic rendering to serve completely different content to users and crawlers, which is why Google penalizes it: for example, serving a page about cats to users and one about dogs to crawlers.

All SEO-relevant content must be included in the dynamically rendered HTML.
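A rough way to check this is to compare the HTML served to crawlers with the HTML users end up with after JavaScript runs. This TypeScript sketch compares the `<title>`, the first `<h1>` and the set of links; the choice of elements and the `parityIssues` helper are assumptions for illustration, and the regexes are simplified.

```typescript
// Hypothetical parity check between the crawler version and the user version.

function firstMatch(re: RegExp, html: string): string | null {
  const m = re.exec(html);
  return m ? m[1].trim() : null;
}

function linkSet(html: string): Set<string> {
  const links = new Set<string>();
  const re = /<a\s[^>]*href="([^"]*)"/gi;
  let m: RegExpExecArray | null;
  while ((m = re.exec(html)) !== null) links.add(m[1]);
  return links;
}

function parityIssues(botHtml: string, userHtml: string): string[] {
  const issues: string[] = [];
  const checks: Array<[string, RegExp]> = [
    ["title", /<title>([^<]*)<\/title>/i],
    ["h1", /<h1[^>]*>([^<]*)<\/h1>/i],
  ];
  for (const [label, re] of checks) {
    const bot = firstMatch(re, botHtml);
    const user = firstMatch(re, userHtml);
    if (bot !== user) issues.push(`${label} differs: ${bot} vs ${user}`);
  }
  // Every link users can see should also exist in the crawler version.
  const botLinks = linkSet(botHtml);
  linkSet(userHtml).forEach((href) => {
    if (!botLinks.has(href)) issues.push(`link missing for crawlers: ${href}`);
  });
  return issues;
}

const botHtml = '<title>Blue shoes</title><h1>Blue shoes</h1><a href="/red">Red</a>';
const userHtml =
  '<title>Blue shoes</title><h1>Blue shoes</h1><a href="/red">Red</a><a href="/green">Green</a>';
console.log(parityIssues(botHtml, userHtml)); // one issue: /green is missing for crawlers
```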

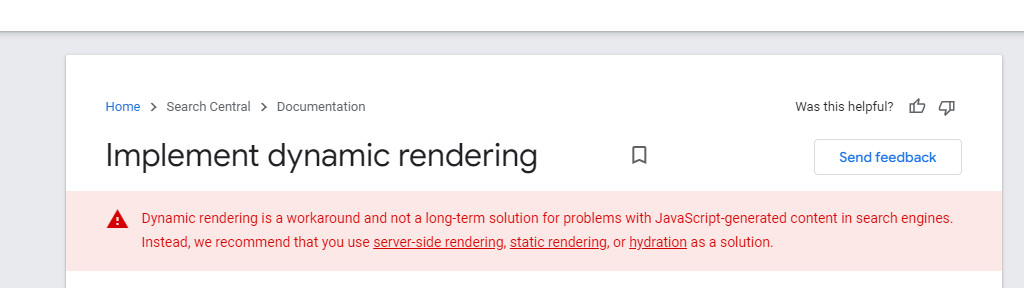

August 10, 2022: Google updated its documentation to state that dynamic rendering is a workaround, not a long-term solution, for the problem of JavaScript-generated content and search engines. It recommends using one of the other rendering methods explained in this article instead.

June 19, 2023: Martin Splitt said it no longer makes sense to use dynamic rendering.

Hybrid rendering, also known as rehydration, mixes CSR and SSR as needed: some parts of the website are rendered only on the user's side and others on the server.

While dynamic rendering shows one version to users and another to selected bots, hybrid rendering shows the same version to both search engines and humans. Therefore, anything that affects SEO should be loaded with SSR, while dynamic content that isn't relevant for SEO can be loaded with CSR.

This way, you can deliver the content needed for SEO, such as meta tags and static content, through SSR, and the features that require a lot of dynamism through CSR.
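A framework-agnostic TypeScript sketch of that split: each section of a hypothetical page declares whether it is rendered on the server or left for the client. SSR sections end up in the HTML crawlers receive; CSR sections are sent as empty placeholders for client-side JavaScript to fill. The `Section` type and the `data-client-render` attribute are invented for the example.

```typescript
// Hybrid rendering sketch: per-section choice between SSR and CSR.

type RenderMode = "ssr" | "csr";

interface Section {
  id: string;
  mode: RenderMode;
  render: () => string; // called on the server only for "ssr" sections
}

function renderOnServer(sections: Section[]): string {
  return sections
    .map((s) =>
      s.mode === "ssr"
        ? `<section id="${s.id}">${s.render()}</section>`
        : // Empty placeholder; client-side JS renders this part in the browser.
          `<section id="${s.id}" data-client-render="true"></section>`
    )
    .join("\n");
}

const productPage: Section[] = [
  // SEO-relevant: content and internal links go into the server HTML.
  { id: "description", mode: "ssr", render: () => "<h1>Blue shoes</h1><p>Leather, sizes 36-46.</p>" },
  { id: "related", mode: "ssr", render: () => '<a href="/products/red-shoes">Red shoes</a>' },
  // Highly dynamic, no SEO value: rendered on the client.
  { id: "live-stock", mode: "csr", render: () => "<p>3 left in your size</p>" },
];

console.log(renderOnServer(productPage));
```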

Its main advantages are faster content and link discovery for search engines, faster delivery of content to users, the ability to personalize which content is rendered with SSR and which with CSR, and interactivity for users. Its main drawback is that it can be complex and time-consuming to implement.

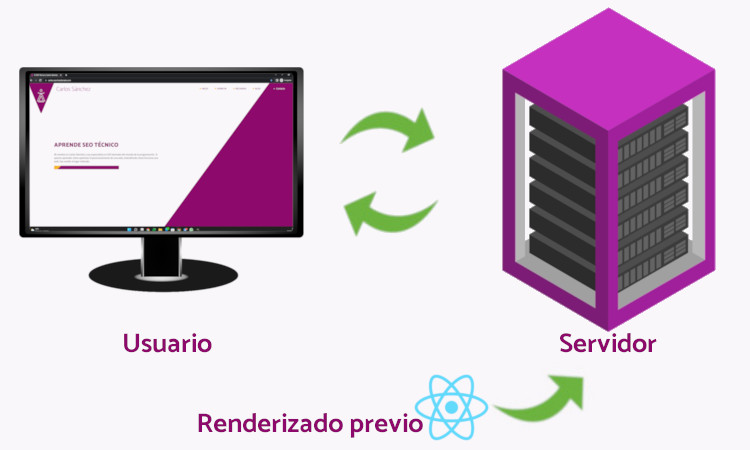

Static rendering (SSG) is the pre-rendering method that generates the HTML at build/deploy time; that HTML is then reused for every user request.

The only potential disadvantages of this rendering method are the deployment time and the time required to generate certain content, but these aren't significant drawbacks for usability or SEO. On the other hand, it offers numerous advantages.

This is the rendering method typically used in frameworks like Gatsby or Next.js. A flat HTML page is generated once and served on every request, so neither the server nor the user has to perform the rendering process.

SSG doesn't mean the website isn't dynamic. You can still use JavaScript to communicate with APIs and add sections with CSR (client-side rendering).

The result is lightweight websites with a speed that's hard to match. The problem is that editing anything on the website practically requires a complete rebuild and redeploy.

This type of rendering provides a pleasant user experience, unparalleled security, scalability, and allows for any type of SEO implementation.
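The SSG flow can be sketched in TypeScript as two separate phases: a build step that renders every route once, and a request handler that only looks up the finished HTML. The routes and function names are hypothetical; in a real project the build output is written to files and served from a CDN rather than kept in a `Map`.

```typescript
// SSG sketch: render everything at build time, serve the same HTML afterwards.

interface Route {
  path: string;
  title: string;
  body: string;
}

let renders = 0; // counts actual render calls

function renderPage(route: Route): string {
  renders++;
  return `<!doctype html><html><head><title>${route.title}</title></head>` +
    `<body>${route.body}</body></html>`;
}

// Build/deploy time: every route is rendered once.
function build(routes: Route[]): Map<string, string> {
  const output = new Map<string, string>();
  for (const route of routes) output.set(route.path, renderPage(route));
  return output;
}

// Request time: no rendering, just a lookup of the prebuilt HTML.
function serveStatic(built: Map<string, string>, path: string): string | undefined {
  return built.get(path);
}

const built = build([
  { path: "/", title: "Home", body: '<a href="/blog/rendering">Rendering guide</a>' },
  { path: "/blog/rendering", title: "Rendering guide", body: "<h1>Rendering guide</h1>" },
]);

serveStatic(built, "/blog/rendering");
serveStatic(built, "/blog/rendering");
console.log(renders); // 2: one per route at build time, none per request
// Changing any content means calling build() again, i.e. a new deploy.
```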

Deferred Static Generation (DSG) is very similar to SSG, but improved. The only difference is that you can choose to defer certain pages or sections until a user requests them.

For example, there may be pages with low traffic that do not need to be generated anew with each deploy.

It's simply a new twist that makes SSG even better.

It's primarily a Gatsby concept.
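A rough TypeScript sketch of the DSG idea: routes flagged as deferred are skipped at build time, rendered on their first request, and then cached like any other static page. The `deferred` flag and the `DeferredSite` class are hypothetical and do not reflect Gatsby's actual API.

```typescript
// DSG sketch: build important pages up front, defer the rest to first request.

interface Route {
  path: string;
  title: string;
  deferred: boolean; // hypothetical flag: true = render on first request
}

function renderPage(route: Route): string {
  return `<!doctype html><html><head><title>${route.title}</title></head>` +
    `<body><h1>${route.title}</h1></body></html>`;
}

class DeferredSite {
  private cache = new Map<string, string>();
  private routes = new Map<string, Route>();
  renders = 0; // how many times a page was actually rendered

  constructor(routes: Route[]) {
    for (const route of routes) {
      this.routes.set(route.path, route);
      if (!route.deferred) this.renderAndCache(route); // built at deploy time
    }
  }

  private renderAndCache(route: Route): string {
    this.renders++;
    const html = renderPage(route);
    this.cache.set(route.path, html);
    return html;
  }

  get(path: string): string | undefined {
    const cached = this.cache.get(path);
    if (cached !== undefined) return cached; // already static
    const route = this.routes.get(path);
    // First visit to a deferred page: render it now and keep it cached.
    return route ? this.renderAndCache(route) : undefined;
  }
}

const site = new DeferredSite([
  { path: "/", title: "Home", deferred: false },
  { path: "/archive/2015", title: "2015 archive", deferred: true }, // low traffic
]);
console.log(site.renders); // 1: only the home page was built
site.get("/archive/2015");
site.get("/archive/2015");
console.log(site.renders); // 2: rendered once on first request, then cached
```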

Incremental Static Regeneration (ISR) is another twist on creating static pages with JavaScript: it lets developers use SSG with all its benefits without having to rebuild the entire site when making changes. It's much more scalable for large projects than SSG.

Static pages can be generated on demand and allow for greater control. So, roughly speaking, it's the same as SSG, but without the major drawbacks.

It is very similar to DSG, but its main difference is that you can make certain pages render only when they are visited (not exactly, but similar to server-side rendering), so the project's rendering is more staggered and better managed, and the server doesn't get saturated.
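ISR is usually described as stale-while-revalidate caching. The TypeScript sketch below assumes a hypothetical `IncrementalCache` with a `revalidateSeconds` window and an injected clock (`nowMs`) so it is deterministic: a page is rendered on its first request, served from cache while fresh, and once it is stale the next request still receives the old copy while a new one is generated for later visitors. Real frameworks regenerate in the background; here it happens synchronously to keep the example short, and none of this is the API of Next.js or any other framework.

```typescript
// ISR sketch (stale-while-revalidate) with an injected clock for determinism.

interface Entry {
  html: string;
  generatedAt: number; // ms timestamp of the last render
}

class IncrementalCache {
  private entries = new Map<string, Entry>();
  private render: (path: string) => string;
  private revalidateMs: number;

  constructor(render: (path: string) => string, revalidateSeconds: number) {
    this.render = render;
    this.revalidateMs = revalidateSeconds * 1000;
  }

  get(path: string, nowMs: number): string {
    const entry = this.entries.get(path);
    if (entry === undefined) {
      // Not built yet: render on demand and cache it.
      const html = this.render(path);
      this.entries.set(path, { html, generatedAt: nowMs });
      return html;
    }
    if (nowMs - entry.generatedAt >= this.revalidateMs) {
      // Stale: regenerate for future visitors (synchronously, for brevity)...
      this.entries.set(path, { html: this.render(path), generatedAt: nowMs });
    }
    // ...but this request still gets the copy it found in the cache.
    return entry.html;
  }
}

let price = 100; // hypothetical data that changes after the page was generated
const isr = new IncrementalCache((path) => `<p>${path}: ${price} EUR</p>`, 60);

console.log(isr.get("/shoes", 0));     // <p>/shoes: 100 EUR</p> (first render)
price = 90;
console.log(isr.get("/shoes", 30000)); // still 100: within the 60 s window
console.log(isr.get("/shoes", 61000)); // still 100: stale copy served, page regenerated
console.log(isr.get("/shoes", 62000)); // <p>/shoes: 90 EUR</p>
```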

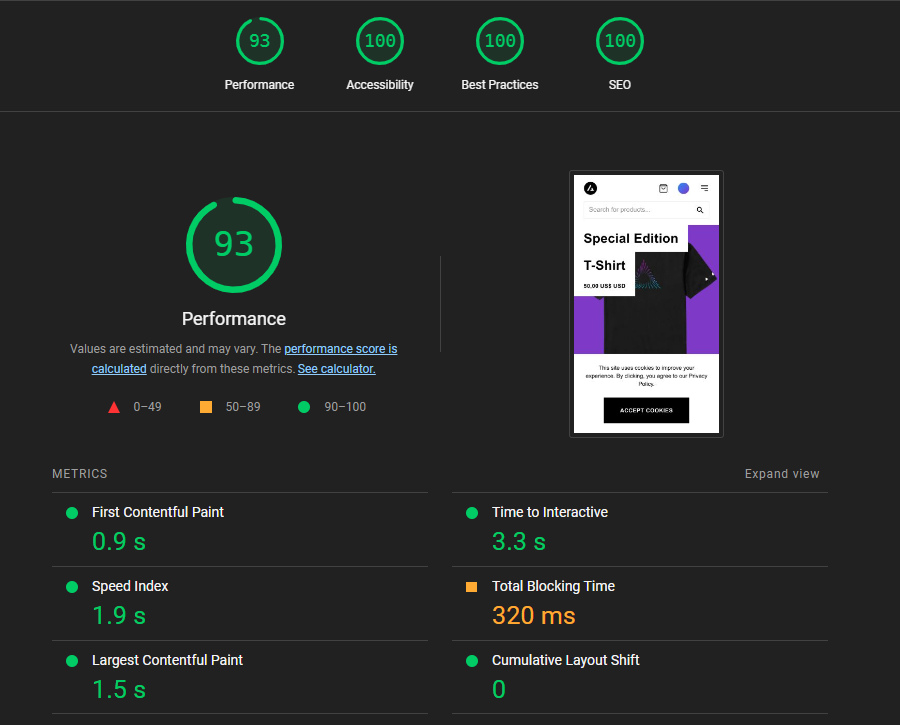

Here we can see a demo of the potential this type of rendering has for e-commerce from a performance perspective, bearing in mind that there is still significant room for improvement.

ISR is currently the best strategy for a large website that is updated frequently and needs to be optimized for SEO, without suffering every time a deploy is done.

To sum up:

CSR: runs JavaScript on the client side. With proper optimization, pages can load very quickly, but you have to be careful with rendering.

SSR: rendering happens on the server, which generates new HTML with each request. It puts more load on the server and guarantees good rendering, but you may lose some advantages of using a JS framework (you can always hydrate the JS after the initial HTML is delivered to get SPA-like behavior without losing functionality).

Dynamic rendering: CSR for the user, SSR for Google. It's not cloaking, but it can be inefficient or outdated, because it requires a lot of work and Google now renders JS. Google advises against it because of the unnecessary effort it requires.

Hybrid rendering: it may be complex to implement, but it lets some elements be rendered with CSR and others with SSR.

SSG: a pre-rendering process that runs before any request, generating a plain HTML page that is reused for every request.

DSG: similar to SSG but improved, and mainly a Gatsby.js concept. You can choose to defer certain pages or sections until a user requests them; that is, the page is created on demand on first access and then cached as if it were SSG.

ISR: very similar to DSG, but you can make certain pages render only when they are visited (roughly like server-side rendering), so the project's rendering is more staggered and better managed, and the server doesn't get saturated. In other words, it's like DSG but more widely supported across frameworks and more customizable.

I currently offer advanced SEO training in Spanish. Would you like me to create an English version? Let me know!
