To set up the best Robots.txt on a website, you need to understand what robots.txt is

The robots.txt is a file that comes up constantly in SEO. In this article, we will see how to create a good robots.txt; however, before presenting an infallible or high-quality robots.txt, it is necessary to go back to the basics: what exactly it is and what it consists of.

The robots.txt is a file that has been used since 1994 as a method to prevent certain Bots/Spiders/Crawlers/Trackers/user-agent (in this case, they all mean the same thing) from crawling part of a website. It is also used in an attempt to vary the crawl frequency, although due to the lack of standardization this is not achieved.

The robots.txt is a file with crawl recommendations that crawlers interpret at their own discretion.

In fact, it is important to emphasize that it is simply a text file that crawlers may read and choose to follow. I emphasize “may” because it never actually prevents a website from being crawled; it is simply a crawl guide for the bot, which the bot can ignore at will. Therefore, even if a User-agent is forbidden from crawling the website via robots.txt, it could still bypass the restriction. For example, the Internet Archive ignores it.

The robots.txt is a file intended to be a standard. In fact, Google made significant efforts in 2019 to include it as a standard within the IETF, because not all bots read the file in the same way and each crawler interprets the directives it considers appropriate.

It is easy to wonder what use this file has if each user-agent does whatever it wants. But the reality is that the most well-known search engines such as Google, Baidu, Yandex, or DuckDuckGo do respect this standard, or at least the most important directives.

This allows us to control search engine crawling, potentially achieving an improvement in Crawl Budget.

The robots.txt is used to prevent certain search engines from crawling the directories or files we choose. This does not prevent indexing: a blocked URL can still end up in the index if other pages link to it.

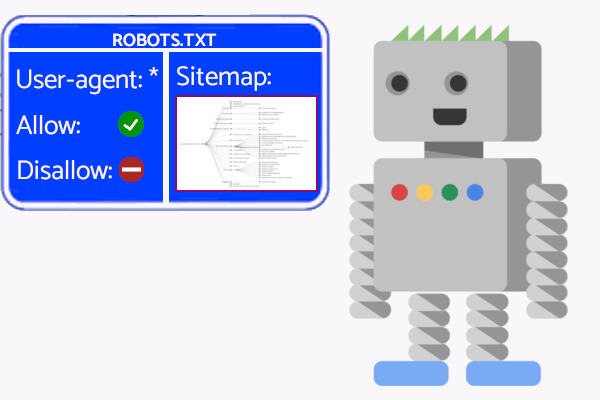

It is also used to indicate sitemaps to search engines, even when we have not submitted them through Search Console or the equivalent webmaster tools of each search engine.

We must avoid blocking the crawling of CSS files, JavaScript, or any file that may be critical to the performance or understanding of our website. At first glance, we might think this prevents search engines from wasting time on those files, but for example, we could prevent the search engine from loading the CSS that makes a website responsive.

On the other hand, a Disallow should never be used to prevent the crawling of URLs that return a 404 or of non-indexable pages. If Google cannot crawl the URL, it cannot read the response code or see that the page is non-indexable, even when this is specified via the X-Robots-Tag header.

The symbols and directives of a robots.txt, by order of importance:

# : Used to add comments that crawlers do not interpret (they are only there so that humans can understand each other).

Example: # This would be a comment inside a robots.txt file that would have no effect on crawlers.

$ : Used to indicate the end of a match, that is, how a URL must end for the rule to apply. In the example below, /category/ itself is blocked but /category/shoes is not.

Example: Disallow: /category/$

* : The textbook definition says “designates 0 or more instances of any valid character”. Translated into plain English, it means “everything that goes here”.

Example:

    User-agent: *
    Disallow: */example/*
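To make the behaviour of `$` and `*` more concrete, here is a minimal Python sketch that translates a robots.txt path pattern into a regular expression. It is only an illustration of the matching idea, not the parser any search engine actually uses; the function names `pattern_to_regex` and `rule_matches` and the sample paths are assumptions made for this example.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters (including none) and a trailing '$'
    anchors the match at the end of the path. Everything else is literal.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then put '.*' wherever the pattern had '*'.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if the pattern matches the path, starting at its first character."""
    return pattern_to_regex(pattern).match(path) is not None


print(rule_matches("/category/$", "/category/"))          # True
print(rule_matches("/category/$", "/category/shoes"))     # False
print(rule_matches("*/example/*", "/blog/example/post"))  # True
print(rule_matches("/example/", "/blog/example/post"))    # False
```

Without the leading `*`, a rule only matches paths that begin with it, which is why the last call returns False.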

The User-agent line identifies the crawler to which the rules apply. User-agents can be grouped to define rules collectively. Once directives are placed after the User-agents, the rules will apply to those grouped User-agents.

    User-agent: Googlebot
    User-agent: Sogou
    User-agent: sogou spider
    User-agent: Screaming Frog SEO Spider
    User-agent: Applebot
    User-agent: DuckDuckBot
    Allow: /

    User-agent: 360Spider
    User-agent: msnbot
    User-agent: Twitterbot
    User-agent: Pingdom.com bot
    User-agent: baiduspider
    User-agent: Yahoo! Slurp
    Disallow: /

In this example, the first six User-agents (Googlebot through DuckDuckBot) are allowed to crawl everything and the remaining six (360Spider through Yahoo! Slurp) are not, since each directive applies to the User-agents listed directly above it, until the next group of User-agents begins.
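The grouping can be checked with Python's standard `urllib.robotparser` module. This is a small sketch with a shortened version of the file above; the URL is hypothetical, and the result only reflects how this particular parser reads the file, which may differ from how each search engine does.

```python
from urllib.robotparser import RobotFileParser

# Shortened version of the grouped example: two groups, two crawlers each.
ROBOTS_TXT = """\
User-agent: Googlebot
User-agent: DuckDuckBot
Allow: /

User-agent: msnbot
User-agent: baiduspider
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

URL = "https://example.com/any-page"  # hypothetical URL, used only for the check
results = {
    agent: parser.can_fetch(agent, URL)
    for agent in ("Googlebot", "DuckDuckBot", "msnbot", "baiduspider")
}
for agent, allowed in results.items():
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Note that this parser treats a blank line as the end of a group, so the User-agent lines of one group are written without blank lines between them.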

Unofficial list of user-agents

Disallow: This is the directive that gives meaning to robots.txt; it is used to specify the paths that bots should not crawl.

Allow: If there is no Disallow, crawling is allowed by default; this directive lets us permit specific content inside a path that a Disallow blocks.

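In case of conflict between an Allow and a Disallow that match the same URL, Google applies the most specific rule (the one with the longest path); if both are equally specific, the most permissive directive wins.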
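More precisely, Google's documentation and RFC 9309 describe the conflict resolution as "longest match wins, and Allow wins a tie". The following Python sketch illustrates that idea under simplifying assumptions: rules are plain path prefixes (no `*` or `$`), and the function name `is_allowed` and the sample paths are made up for the example. It is not the code any search engine runs.

```python
from typing import Iterable, Tuple


def is_allowed(path: str, rules: Iterable[Tuple[str, str]]) -> bool:
    """Decide whether a path may be crawled under longest-match precedence.

    rules holds (directive, path_prefix) pairs such as ("disallow", "/private/").
    """
    best_len = -1
    allowed = True  # no matching rule means crawling is allowed by default
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        # A longer prefix is more specific; on a tie, Allow beats Disallow.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


rules = [("disallow", "/private/"), ("allow", "/private/press/")]
print(is_allowed("/private/press/launch.html", rules))  # True
print(is_allowed("/private/notes.html", rules))         # False
print(is_allowed("/blog/", rules))                      # True
```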

Sitemap: Used to tell all crawlers where your Sitemaps are located. It is one of the most useful directives within robots.txt and is independent of the User-agent groups. That is, it can even be placed at the very top of the file and will still be read by all crawlers.
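As a quick check that the Sitemap line does not depend on any User-agent group, here is a sketch using `urllib.robotparser` from the Python standard library (the `site_maps()` method requires Python 3.8 or later). The sitemap URL and the `/search/` path are placeholders chosen for the example.

```python
from urllib.robotparser import RobotFileParser

# The Sitemap line sits at the very top, before any User-agent group.
ROBOTS_TXT = """\
Sitemap: https://example.com/sitemap.xml

User-agent: *
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

sitemaps = parser.site_maps()  # list of sitemap URLs, or None if there are none
print(sitemaps)
```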

For more curious readers, see my article on directives that are not read by Google.

The robots.txt must always be located in the root folder of a project and must be named robots.txt
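Because the file always lives at the root, its address can be derived from any URL of the site. The helper below is a small sketch using the standard `urllib.parse` module; the name `robots_txt_url` and the example URLs are assumptions made for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt location for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host; drop the original path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/post?x=1#top"))
print(robots_txt_url("https://shop.example.com/a/b"))
```

Each host gets its own file, so a subdomain such as shop.example.com needs a separate robots.txt from example.com.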

On a technical level, I recommend Google’s own step-by-step guide on how encoding works within robots.txt, as it is very comprehensive.

I recommend using the Google Robots.txt Tester, the one from Merkle, or even Yandex’s own tool. In fact, the ideal approach is to build the robots.txt within these tools so you can verify that it has the desired effect.

The entire introduction leads to this question: what is the best robots.txt? It is quite common to come across pages titled “the best robots.txt for WordPress | Shopify | whatever technology”. And the reality is much simpler.

The robots.txt is a tool used to block crawling by crawlers that interpret it (for example, the most well-known search engines). Therefore, it should be used for that purpose and for the Sitemap.

The best robots.txt is the one that prevents crawling of what you want to prevent from being crawled, if you actually want to prevent something from being crawled.

That is to say, just because it is a technical aspect of web positioning does not mean you need to create a giant robots.txt file. In many cases, the smartest option is to leave it empty and allow the crawling of the entire website.

So the only thing your robots.txt should contain is a Disallow for the URLs that you do not want a search engine to waste time crawling.

If there are none, nothing else should be added. This way, time can be spent on other more useful and necessary SEO tasks.

It makes sense when we want to block the crawling of pages that generate zero interest for us or that could hurt our crawl budget.

It also makes sense to block certain directories that can generate errors and confusion for bots. For example, many Cloudflare products generate a directory called /cdn-cgi/, which should be blocked to avoid errors or issues.
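Putting this together, a minimal robots.txt for a site behind Cloudflare could contain little more than the /cdn-cgi/ rule and a Sitemap line. The sketch below checks such a file with Python's `urllib.robotparser`; the domain, the sitemap URL, the sample paths and the helper name `crawlable` are all assumptions for the example, and the result shows how this parser reads the file rather than how every crawler will.

```python
from urllib.robotparser import RobotFileParser

# A deliberately small file: one Disallow and the Sitemap, nothing else.
MINIMAL_ROBOTS_TXT = """\
User-agent: *
Disallow: /cdn-cgi/

Sitemap: https://example.com/sitemap.xml
"""


def crawlable(robots_txt: str, agent: str, url: str) -> bool:
    """Return True if this parser lets the given agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


print(crawlable(MINIMAL_ROBOTS_TXT, "Googlebot", "https://example.com/cdn-cgi/anything"))
print(crawlable(MINIMAL_ROBOTS_TXT, "Googlebot", "https://example.com/products/"))
# An empty file blocks nothing.
print(crawlable("", "Googlebot", "https://example.com/products/"))
```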

The humans.txt does not have much to do with robots.txt, but I find it a nice and interesting initiative. Since we take the time to create robots.txt files for bots, many of them completely useless, we can at least use this initiative to credit the people who worked on the site.
