A guide on how to prevent access to certain user agents through the server

A common SEO practice is to block access for certain user agents. Sometimes this is to prevent crawling or indexing, or to stop a flood of requests that consumes server resources unnecessarily; other times it is because there are specific users or bots that we do not want crawling our site.

In many cases this is necessary, but it is often done incorrectly, through robots.txt. robots.txt is a voluntary standard that any crawler can ignore if it chooses to. If your goal is to keep a page out of the index, robots.txt is the wrong tool: it controls crawling, not indexing, and Google can still index a URL blocked by robots.txt (for example, when other pages link to it). If your goal is to prevent crawling or server overload, robots.txt only works for user agents that choose to respect it, and each one interprets it as it sees fit.

For this reason, the best practice is usually to block at the server level. A bot that sends one of the blocked user agents cannot bypass the rule, although a bot that spoofs a different user agent will not be caught by it. In the case of search engines such as Google, a 403 response code not only stops crawling but also keeps the page out of the index, and URLs that were already indexed are eventually removed once they start returning it.

I always recommend customising the error page (for example with `ErrorDocument 403` in Apache or `error_page 403` in Nginx) in case a real user accidentally runs into it.

As you know, I like to show commands both in Apache and in Nginx. So, let's look at the options in both environments.

In Apache, I recommend placing these rules as high as possible in the .htaccess file, so that they are evaluated before any other rewrite rules (for example, those added by a CMS) get a chance to handle the request.

In general, when we block user agents from all content, we want to block more than one. A user agent name may also contain spaces; in the pattern below they are matched with .*, which matches any sequence of characters, spaces included. A literal space cannot go straight into the pattern, because Apache would read it as the end of the argument; other constructs such as `\s` or an escaped space also work. The following example blocks several user agents, some of which have spaces in their names:

```apache
RewriteEngine On
# Match any request whose User-Agent contains one of these names (case-insensitive)
RewriteCond %{HTTP_USER_AGENT} ^.*(Screaming.*Frog.*SEO.*Spider|Xenu's|WebCopier|URL_Spider_Pro|WebCopier.*v3.2a|WebCopier.*v.2.2|EmailCollector|Zeus.*82900.*Webster.*Pro.*V2.9.*Win32|Zeus|Zeus.*Link.*Scout|Baiduspider-image|Baiduspider).*$ [NC]
# Refuse the request with 403 Forbidden
RewriteRule ^.*$ - [F,L]
```

In this code, the RewriteCond line checks the User-Agent header of each request, and any request whose user agent matches the pattern is handled by the RewriteRule that follows.

In regex, the | symbol separates alternatives, so the condition matches if the user agent contains any of the names in the list.
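To see the alternation at work, here is a minimal Python sketch that applies the list of user agents from the RewriteCond above to a few sample User-Agent strings. It assumes Python's `re` module treats this pattern the same way as the PCRE engine Apache uses, which holds here because the pattern only relies on `.`, `*`, `|`, `^`, `$` and grouping. `re.IGNORECASE` stands in for the `[NC]` flag, and the sample strings are made up for illustration.

```python
import re

# Same list as the RewriteCond above; re.IGNORECASE mirrors Apache's [NC] flag.
BLOCKED_UA = re.compile(
    r"^.*(Screaming.*Frog.*SEO.*Spider|Xenu's|WebCopier|URL_Spider_Pro|"
    r"WebCopier.*v3.2a|WebCopier.*v.2.2|EmailCollector|"
    r"Zeus.*82900.*Webster.*Pro.*V2.9.*Win32|Zeus|Zeus.*Link.*Scout|"
    r"Baiduspider-image|Baiduspider).*$",
    re.IGNORECASE,
)


def is_blocked(user_agent: str) -> bool:
    """Return True if the user agent contains any alternative in the list."""
    return BLOCKED_UA.search(user_agent) is not None


# Hypothetical user-agent strings, for illustration only.
samples = [
    "Screaming Frog SEO Spider/19.0",  # the spaces are absorbed by .*
    "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36",
]

for ua in samples:
    print(is_blocked(ua), ua)
```

Running it prints True for the first two strings and False for the Chrome one. It also shows that the more specific alternatives such as `WebCopier.*v3.2a`, `Zeus.*Link.*Scout` or `Baiduspider-image` are redundant: anything they match already contains WebCopier, Zeus or Baiduspider, which are in the list on their own. They do no harm, though.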

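The [F] flag makes the RewriteRule return the desired 403 Forbidden response, and [L] stops processing any further rules (strictly speaking, [F] already implies [L]). The - means the URL is left unchanged. Remember that the same structure can produce other responses if that is useful to you, such as [G] for a 410 Gone, or a redirect with [R=301] and a target URL in place of the -.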

If you want to block a user agent from a specific directory only, add a condition on the URI, following the instructions I give about selecting a URI or parameter for a RewriteRule:

```apache
RewriteEngine On
# Only requests under /no-buscadores/...
RewriteCond %{REQUEST_URI} ^/no-buscadores/ [NC]
# ...whose User-Agent contains Googlebot or Bingbot
RewriteCond %{HTTP_USER_AGENT} ^.*(Googlebot|Bingbot).*$ [NC]
RewriteRule ^.*$ - [F,L]
```

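Because consecutive RewriteCond lines are combined with a logical AND, Googlebot and Bingbot are blocked only inside that subdirectory: nothing within it can be crawled or indexed by those search engines, while the rest of the site stays accessible to them.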
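The AND logic of stacked RewriteCond lines (the default unless a condition carries the [OR] flag) can be modelled in a few lines of Python. This is a simplified sketch: `should_block` is a hypothetical helper, it reuses the two patterns from the snippet above with `re.IGNORECASE` in place of `[NC]`, and it ignores other mod_rewrite details.

```python
import re

# Mirrors: RewriteCond %{REQUEST_URI} ^/no-buscadores/ [NC]
PATH_COND = re.compile(r"^/no-buscadores/", re.IGNORECASE)
# Mirrors: RewriteCond %{HTTP_USER_AGENT} ^.*(Googlebot|Bingbot).*$ [NC]
UA_COND = re.compile(r"^.*(Googlebot|Bingbot).*$", re.IGNORECASE)


def should_block(request_uri: str, user_agent: str) -> bool:
    """Hypothetical helper: both conditions must match (implicit AND) for a 403."""
    return bool(PATH_COND.search(request_uri)) and bool(UA_COND.search(user_agent))


googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1)"
print(should_block("/no-buscadores/page.html", googlebot))          # True
print(should_block("/blog/post.html", googlebot))                   # False: path does not match
print(should_block("/no-buscadores/page.html", "Mozilla/5.0 Firefox/121.0"))  # False: UA does not match
```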

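In Nginx, the equivalent is a conditional inside the server {} block of nginx.conf:

```nginx
# Return 403 to any request whose User-Agent matches the pattern.
# ~* makes the match case-insensitive, like [NC] in Apache; ~ would be case-sensitive.
if ($http_user_agent ~* (Xenu's|WebCopier)) {
    return 403;
}
```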

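To block it only within a directory, the conditional has to go inside a location block. Use a plain prefix match: the `=` modifier matches only that exact URI, and Nginx does not treat `*` as a wildcard in location paths. Keep in mind that requests under that path will now be handled by this location, so it also needs whatever directives that directory relied on before (such as `try_files` or `proxy_pass`), and regex locations such as a typical `~ \.php$` block still take precedence over a plain prefix location unless you use the `^~` modifier.

```nginx
location /no-rastreo/ {
    # Case-insensitive match on the User-Agent header
    if ($http_user_agent ~* (AhrefsBot)) {
        return 403;
    }
}
```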
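To see why an exact-match location such as `location = /no-rastreo/*` would not cover the directory, here is a deliberately simplified Python model of two Nginx location forms. `location_matches` is a hypothetical helper: it only models `=` (exact match) and no modifier (prefix match), and ignores regex locations, `^~`, and how Nginx picks the longest prefix among several locations.

```python
def location_matches(modifier: str, location_path: str, uri: str) -> bool:
    """Simplified model of two Nginx location forms (hypothetical helper).

    "=" -> exact match: the URI must equal the path character for character.
    ""  -> prefix match: the URI must start with the path.
    """
    if modifier == "=":
        return uri == location_path
    if modifier == "":
        return uri.startswith(location_path)
    raise ValueError(f"modifier not modelled in this sketch: {modifier!r}")


# With "=", the * is literal, so only the URI "/no-rastreo/*" itself would match.
print(location_matches("=", "/no-rastreo/*", "/no-rastreo/page.html"))  # False
print(location_matches("", "/no-rastreo/", "/no-rastreo/page.html"))    # True
```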

Remember, as always, that you need to reload the Nginx configuration for this to take effect (for example, check it with `nginx -t` and then run `nginx -s reload`).

Although I'm used to working with the snippets I present and always verify them to make sure I publish error-free examples, something could still slip through, or a snippet could be altered when you adapt it. So I always recommend testing them in a staging environment before deploying them to the production website.

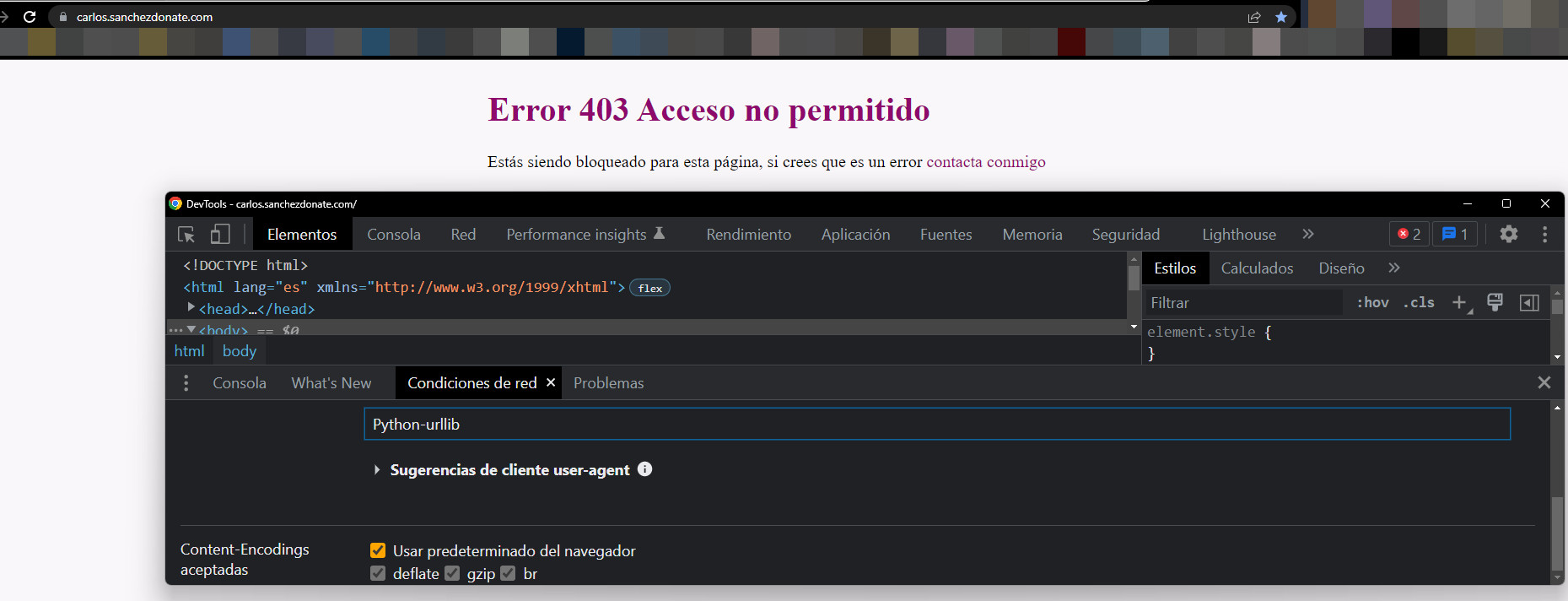

To check whether the site is blocking a specific user agent, we can use Chrome's developer tools (Inspect).

In Chrome, we can do this by right-clicking > Inspect, or using the quick shortcut Control + Shift + I.

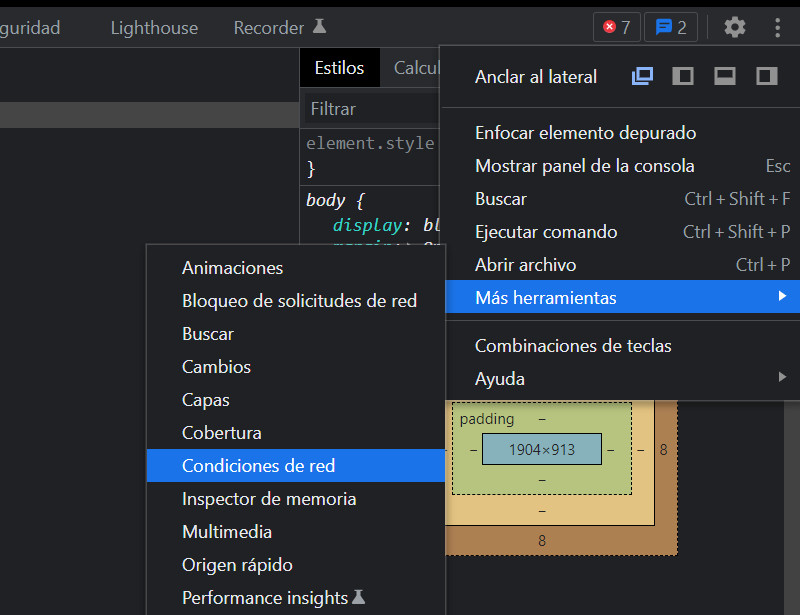

Wherever the DevTools panel is docked, click the three vertical dots at its top right, go to More tools, and select Network conditions.

There we'll see options for caching, network throttling, and the user agent. Uncheck Use browser default, enter the user agent we want to test, reload the page, and check the status code of the document request in the Network panel.

We can also run this test with Screaming Frog by setting a custom user agent in its configuration.
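For repeated checks, for example after each configuration change on staging, a short script can request a page with a given User-Agent header and report the status code. This is a minimal sketch using only Python's standard library; the function name `status_for_user_agent` is hypothetical, and because `urlopen` follows redirects, the code it reports belongs to the final response.

```python
import urllib.error
import urllib.request


def status_for_user_agent(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Request `url` with the given User-Agent header and return the HTTP status code."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urlopen raises HTTPError for 4xx/5xx responses; the status code is still available.
        return error.code
```

For example, once the AhrefsBot rule is active, `status_for_user_agent("https://staging.example.com/no-rastreo/", "AhrefsBot")` should return 403 (the URL is a placeholder), while a regular browser user agent should get the page's usual status code.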

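Conversely, since we're handling user agents on the server, we may want to block access to the entire site so that nothing is crawled or indexed, letting through only a user agent we choose.

To do that, we can define our own user agent (AllowedUserAgent below is a placeholder) and deny every request that does not send it, using negation. Keep in mind that this also blocks regular browsers, so anyone who needs access has to set that user agent, for example with the DevTools option described above.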

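```apache
RewriteEngine On
# Deny every request whose User-Agent does NOT contain AllowedUserAgent
RewriteCond %{HTTP_USER_AGENT} !(AllowedUserAgent) [NC]
RewriteRule ^.*$ - [F,L]
```

In Nginx, inside server {}:

```nginx
# !~* is a negated, case-insensitive match
if ($http_user_agent !~* (AllowedUserAgent)) {
    return 403;
}
```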
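The negated conditions above invert the usual logic: instead of listing who is refused, they list who is let through. A minimal Python sketch of that decision, assuming the same placeholder token `AllowedUserAgent` and using `re.IGNORECASE` to stand in for `[NC]` and Nginx's `!~*`; `is_denied` is a hypothetical helper.

```python
import re

# "AllowedUserAgent" is the placeholder token from the snippets above.
# re.IGNORECASE mirrors [NC] in Apache and the * in Nginx's !~*.
ALLOWED_UA = re.compile(r"AllowedUserAgent", re.IGNORECASE)


def is_denied(user_agent: str) -> bool:
    """Negated match: deny (403) unless the User-Agent contains the allowed token."""
    return ALLOWED_UA.search(user_agent) is None


for ua in ("AllowedUserAgent/1.0", "Mozilla/5.0 (Windows NT 10.0; Win64; x64)", ""):
    print(repr(ua), "-> 403" if is_denied(ua) else "-> allowed")
```

The last case is worth noting: a request with no User-Agent header at all gives an empty string, which does not contain the token, so it is refused too.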

Remember, as always, that you need to reload the Nginx configuration for this to take effect.

I currently offer advanced SEO training in Spanish. Would you like me to create an English version? Let me know!
